(Paper summary) One Initialization to Rule them All: Fine-tuning via Explained Variance Adaptation (Paper)

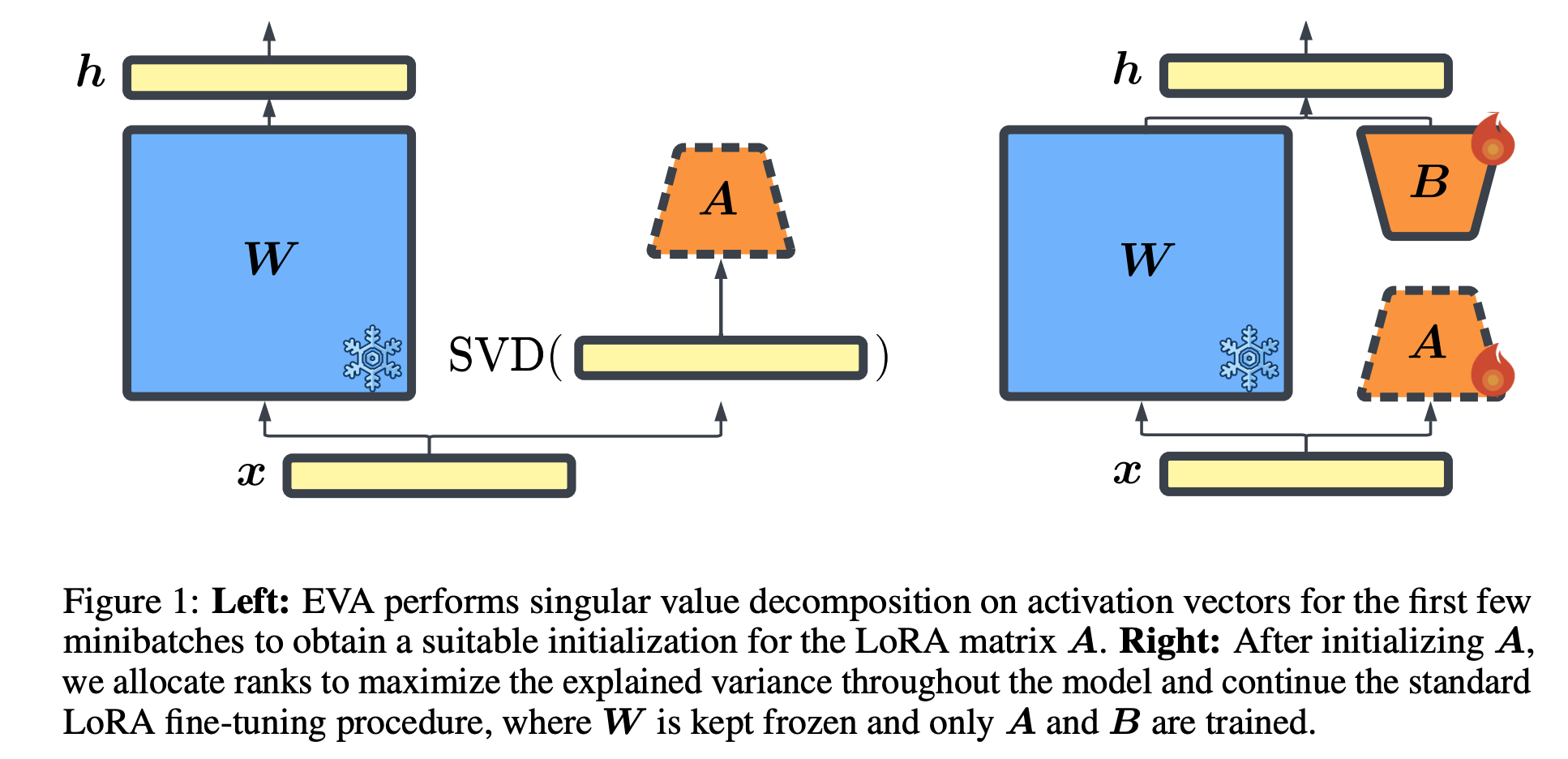

Key points

- In LoRA, the newly added low-rank weights are initialized in a data-driven manner, by computing a singular value decomposition (SVD) on minibatches of activation vectors.
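The idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's implementation: it assumes the down-projection `A` is set to the top right-singular vectors of a single activation minibatch and the up-projection `B` starts at zero, as in standard LoRA. The function name `svd_init_lora` and all shapes are hypothetical.

```python
import numpy as np


def svd_init_lora(activations, out_features, rank):
    """Hypothetical helper: data-driven initialization of a LoRA adapter.

    activations: array of shape (num_tokens, in_features), the inputs
                 seen by the layer on one minibatch.
    Returns (A, B, explained) where A has shape (rank, in_features),
    B has shape (out_features, rank), and explained holds the fraction
    of variance captured by each selected direction.
    """
    # Thin SVD: rows of vt are right-singular vectors in input space.
    _, s, vt = np.linalg.svd(activations, full_matrices=False)

    # Assumption: A takes the top-`rank` directions of the activations.
    A = vt[:rank]

    # Assumption: B starts at zero so the adapter is initially a no-op.
    B = np.zeros((out_features, rank))

    # Explained variance ratio of each selected direction.
    explained = (s[:rank] ** 2) / np.sum(s ** 2)
    return A, B, explained


# Toy minibatch: 64 activation vectors of dimension 16.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 16))
A, B, explained = svd_init_lora(X, out_features=8, rank=4)
```

Because `B` is zero, the product `B @ A` leaves the pretrained layer unchanged at the start of fine-tuning, while `A` already points along the directions in which the layer's inputs vary most.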

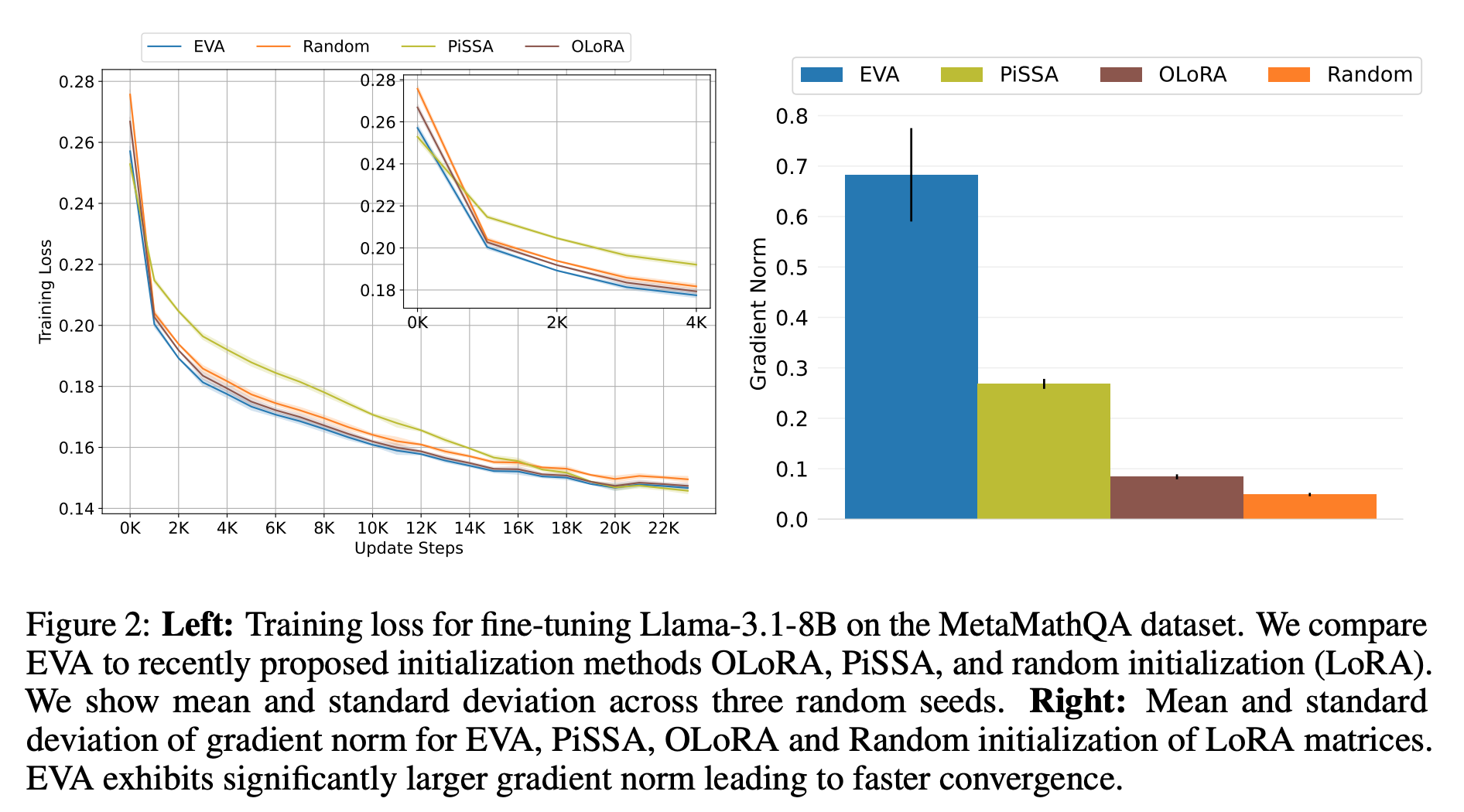

Experimental results