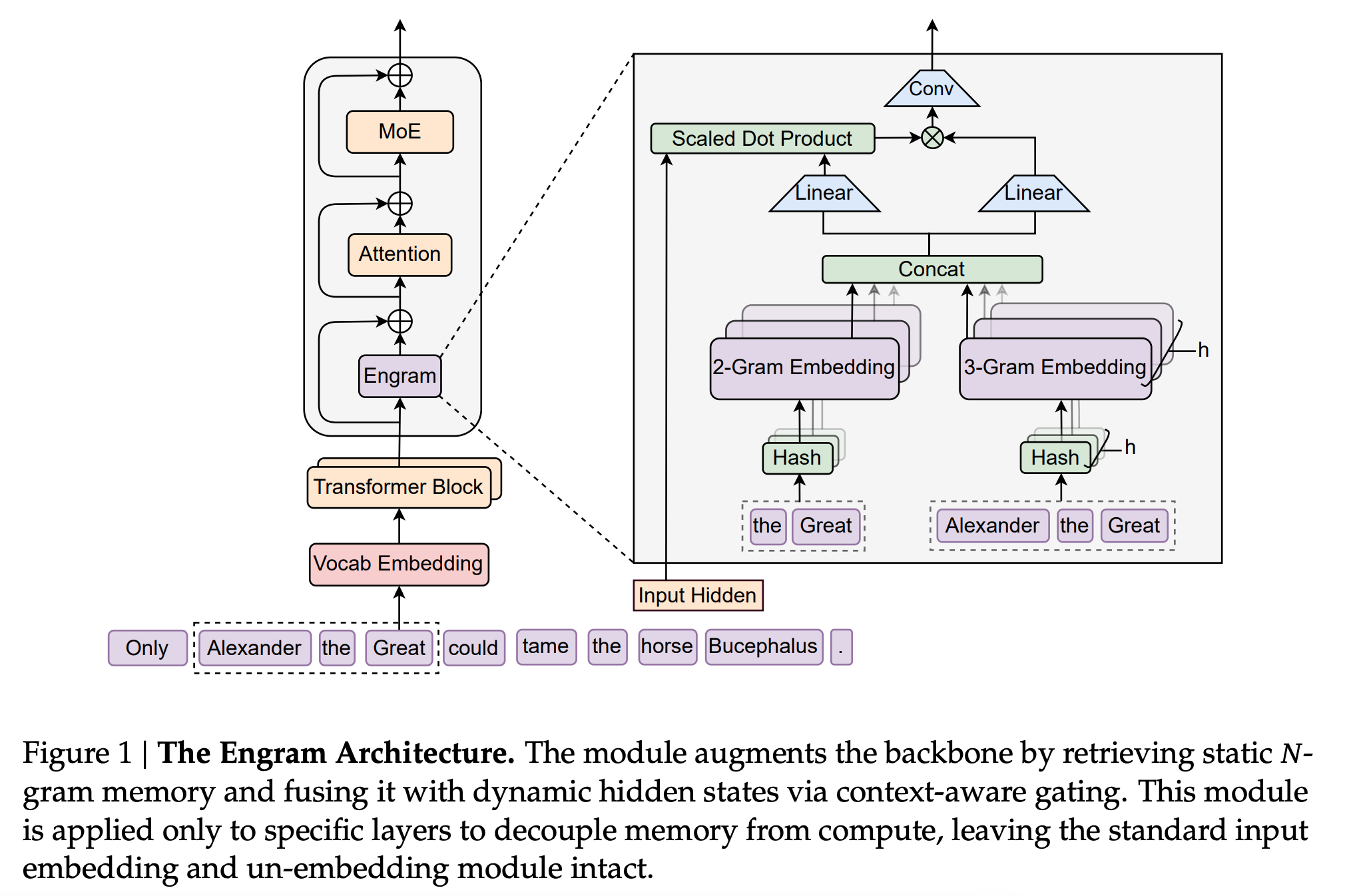

(Paper summary) Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models (Paper)

Key points

- Hash-based embeddings built from 2-grams and 3-grams (trained end-to-end)

- Hash

- raw → canonical (all lowercase, etc.)

- multiplicative-XOR hash

- Concatenate the embeddings from all hash heads, for both 2-grams and 3-grams
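
The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the names `canonicalize`, `mult_xor_hash`, and `NgramMemory`, the number of heads, the table size, the embedding width, and the exact hash formula (multiply each token id by a per-position odd constant, XOR the products, take the result modulo the table size) are all hypothetical choices made for the example. The tables are filled with random values here; in a real model they would be trainable parameters updated end-to-end.

```python
import random

MASK64 = (1 << 64) - 1  # keep products within 64 bits, like a fixed-width hash


def canonicalize(token: str) -> str:
    """Assumed normalization: trim whitespace and lowercase."""
    return token.strip().lower()


def mult_xor_hash(ngram_ids, multipliers, table_size):
    """Multiply each id by a per-position odd constant, XOR the products,
    then reduce the result to a table slot."""
    h = 0
    for tok_id, mult in zip(ngram_ids, multipliers):
        h ^= (tok_id * mult) & MASK64
    return h % table_size


class NgramMemory:
    """Hypothetical multi-head hashed n-gram embedding lookup."""

    def __init__(self, orders=(2, 3), num_heads=4, table_size=1009, dim=8, seed=0):
        rng = random.Random(seed)
        self.orders = orders
        self.num_heads = num_heads
        self.table_size = table_size
        self.dim = dim
        # One odd multiplier per (n-gram order, head, position in the n-gram).
        self.mults = {
            n: [[rng.getrandbits(64) | 1 for _ in range(n)] for _ in range(num_heads)]
            for n in orders
        }
        # One embedding table per (n-gram order, head).
        self.tables = {
            n: [
                [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(table_size)]
                for _ in range(num_heads)
            ]
            for n in orders
        }

    def lookup(self, ids, pos):
        """Return the concatenation of every head's embedding for the
        2-gram and 3-gram ending at position `pos`."""
        out = []
        for n in self.orders:
            for head in range(self.num_heads):
                if pos + 1 < n:
                    out.extend([0.0] * self.dim)  # not enough left context
                    continue
                ngram = ids[pos - n + 1 : pos + 1]
                slot = mult_xor_hash(ngram, self.mults[n][head], self.table_size)
                out.extend(self.tables[n][head][slot])
        return out


# Usage: tokens that differ only in case share a canonical id,
# so they hit the same memory slots.
vocab = {}


def to_id(token: str) -> int:
    return vocab.setdefault(canonicalize(token), len(vocab) + 1)


ids = [to_id(t) for t in ["The", "cat", "sat", "THE", "Cat", "sat"]]
memory = NgramMemory()
vec = memory.lookup(ids, 2)
```

Using several heads with independent multipliers means two n-grams that collide in one table are unlikely to collide in all of them, which is the usual motivation for multi-head hashing. The output width here is `len(orders) * num_heads * dim`.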