(Paper Summary) Does Fine-Tuning LLMs on New Knowledge Encourage Hallucinations? (Paper)

Key Points

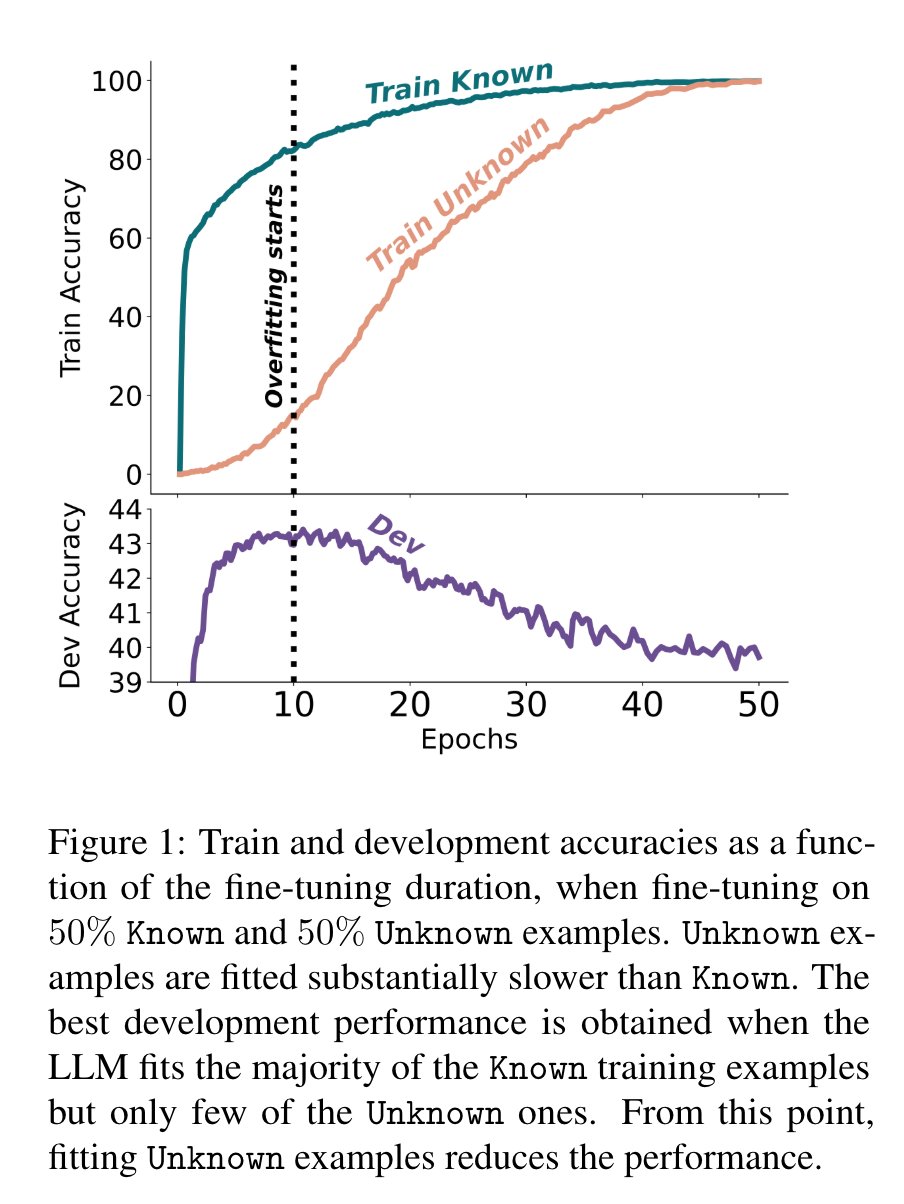

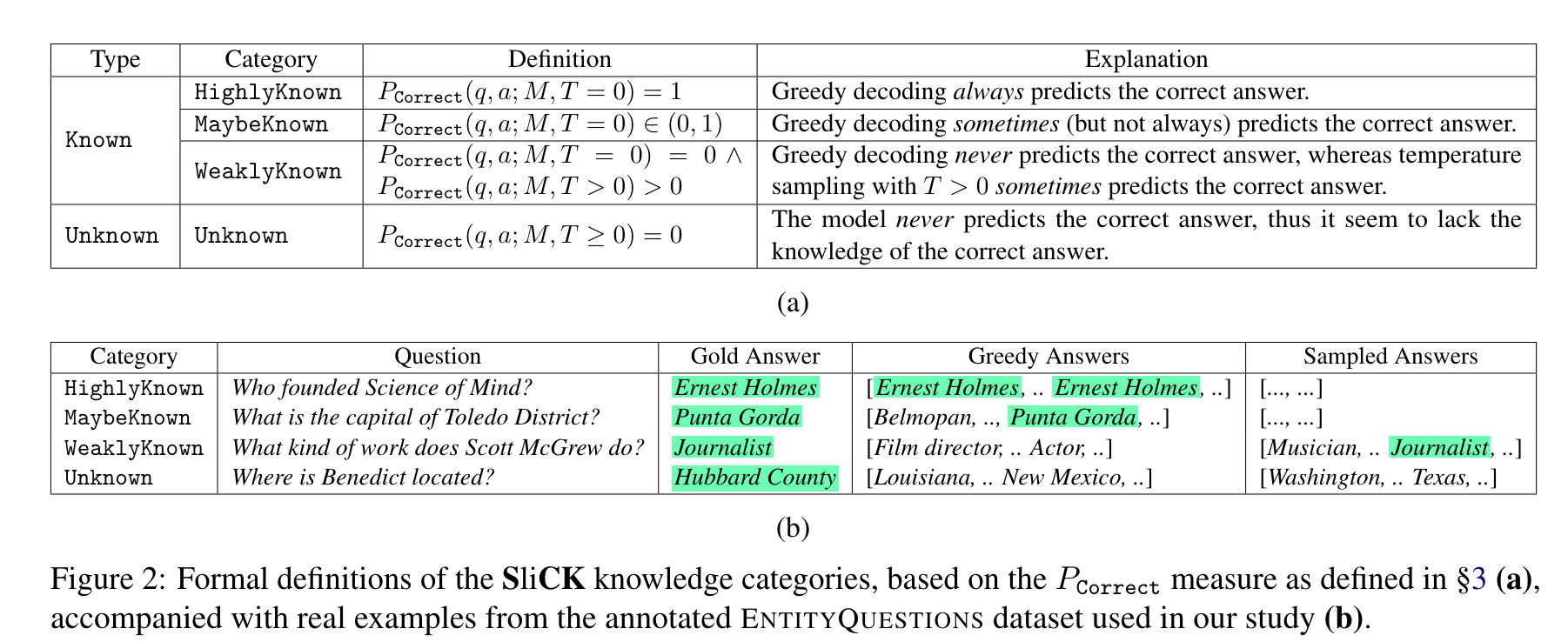

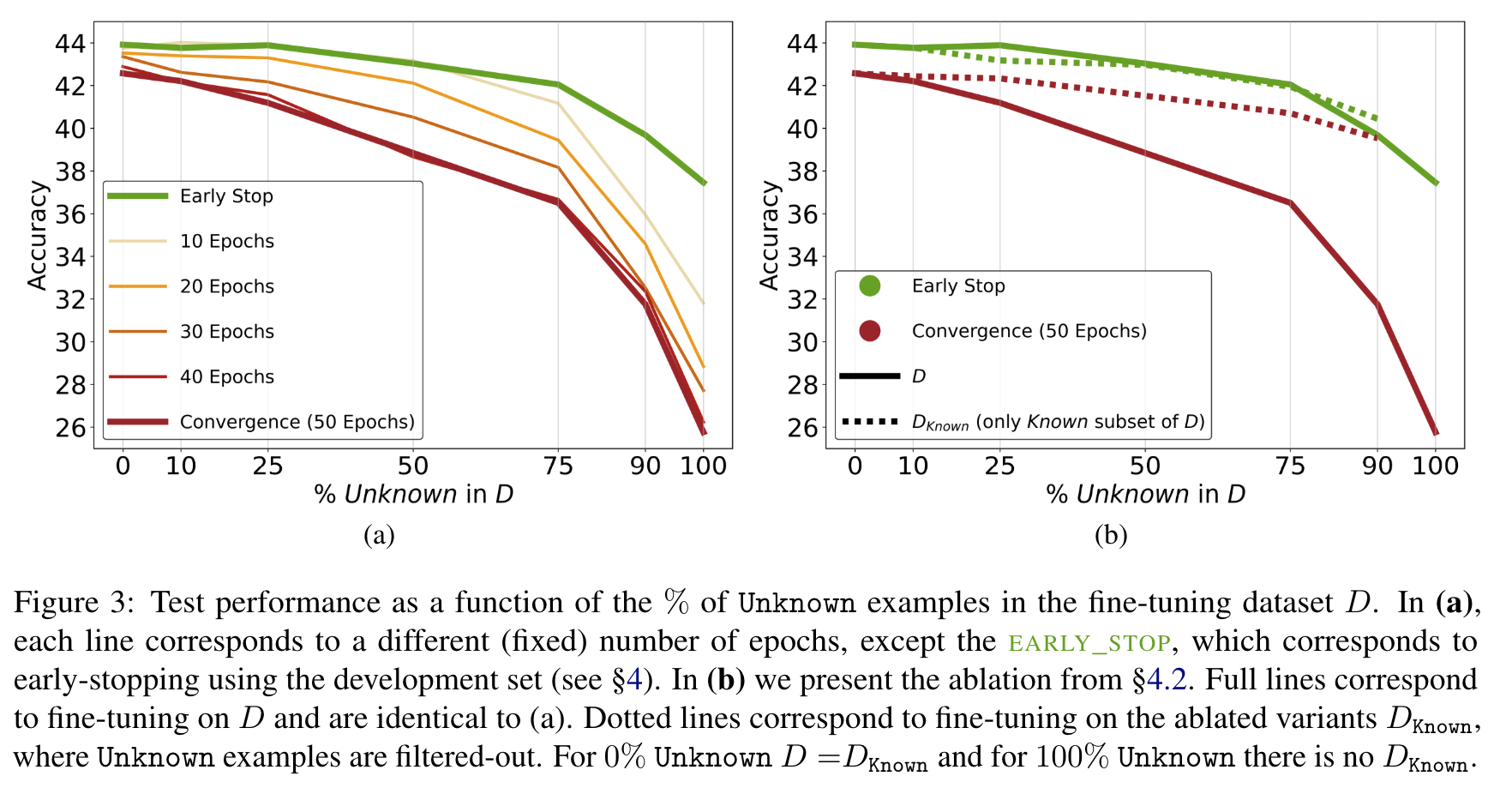

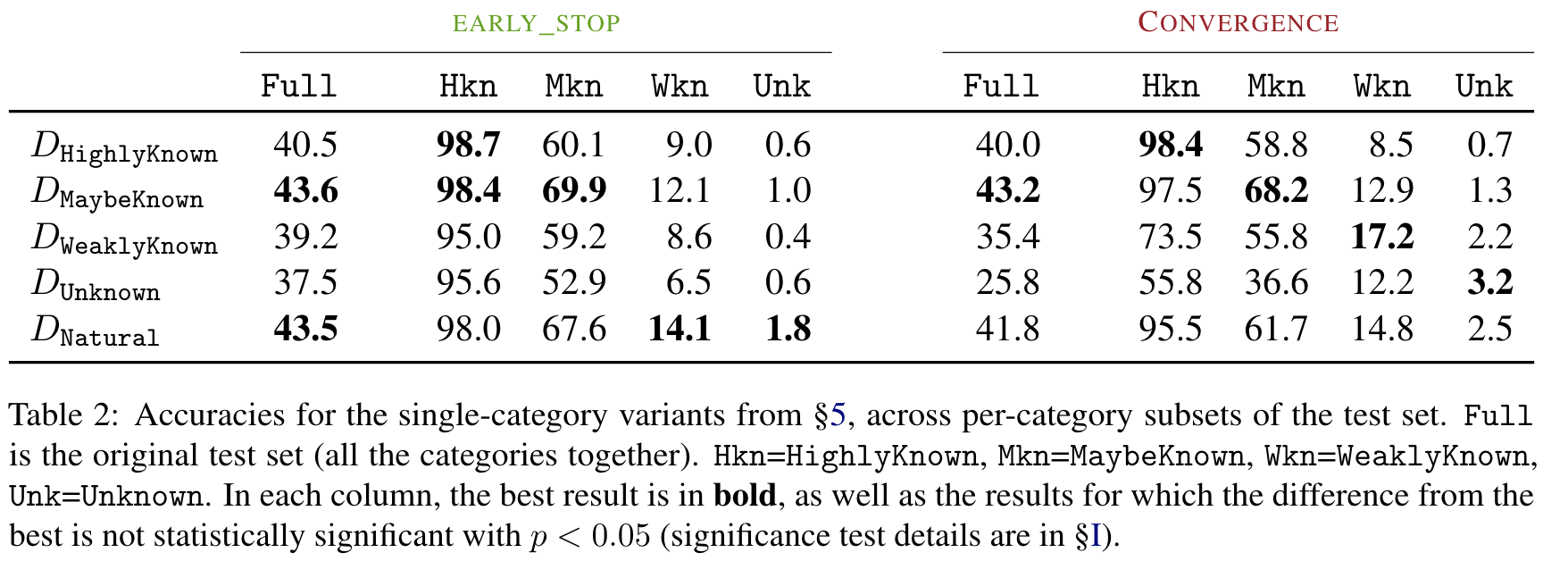

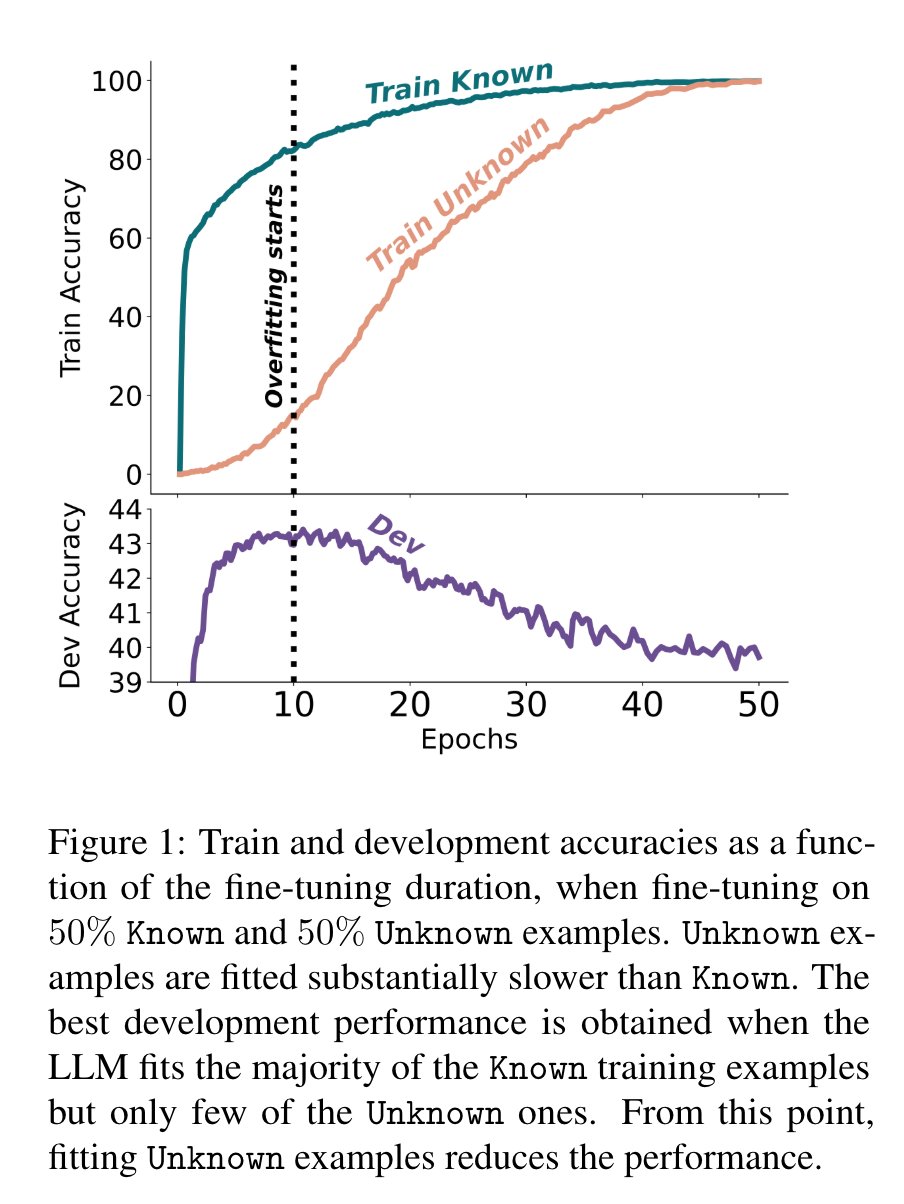

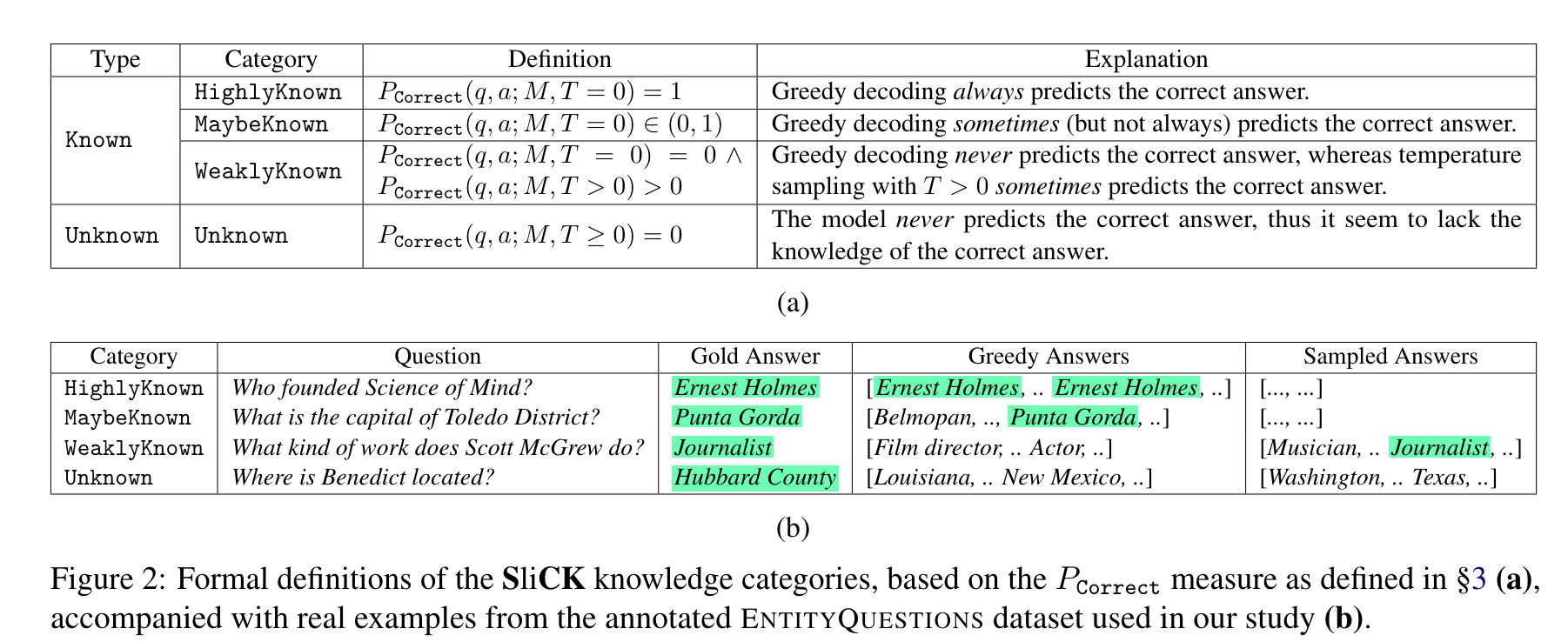

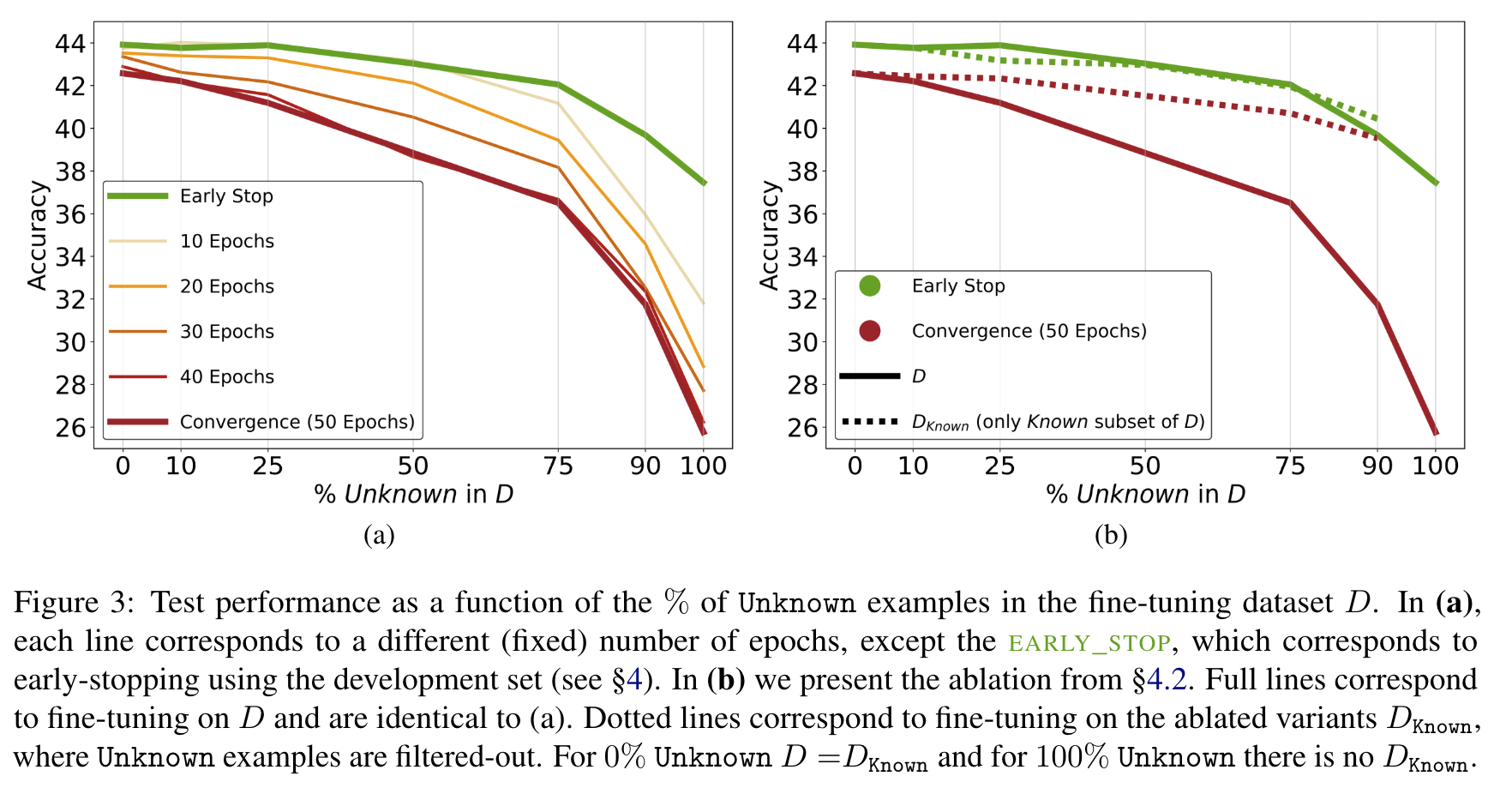

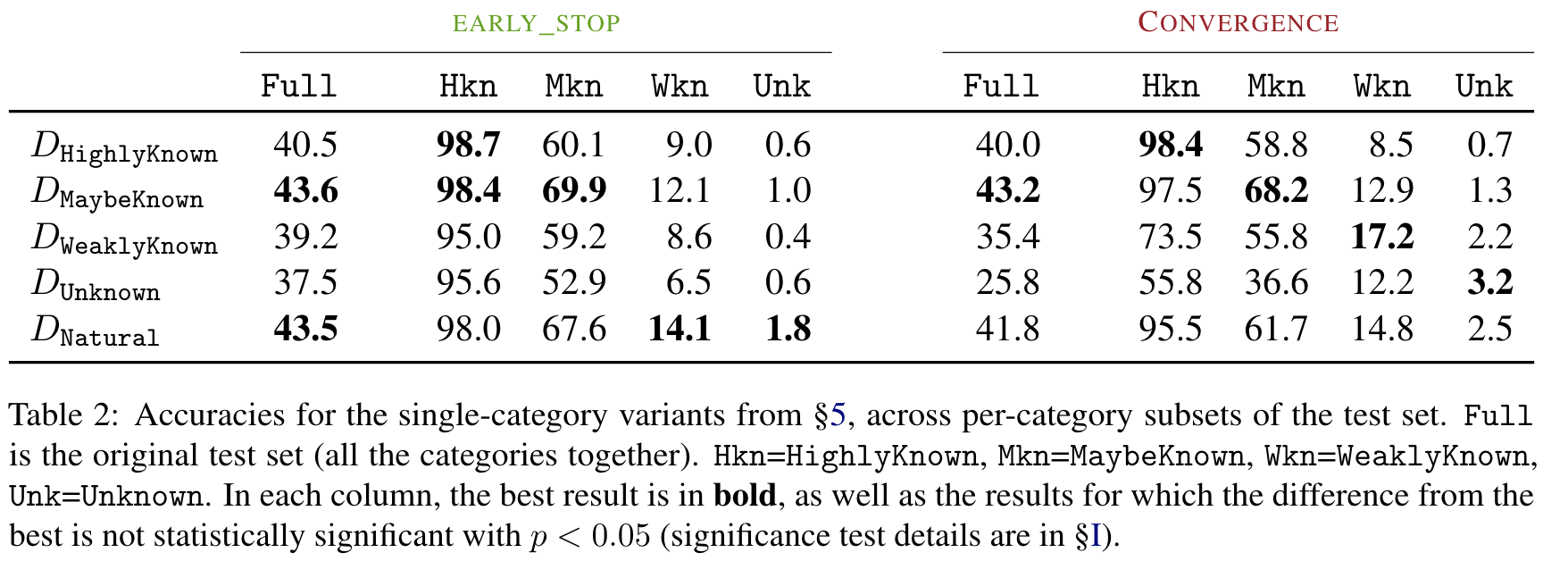

- Large language models acquire factual knowledge mostly through pre-training, whereas fine-tuning teaches them to use that knowledge more efficiently.

Experimental Results
