(Paper summary) Byte Latent Transformer: Patches Scale Better Than Tokens (Paper)

Key points

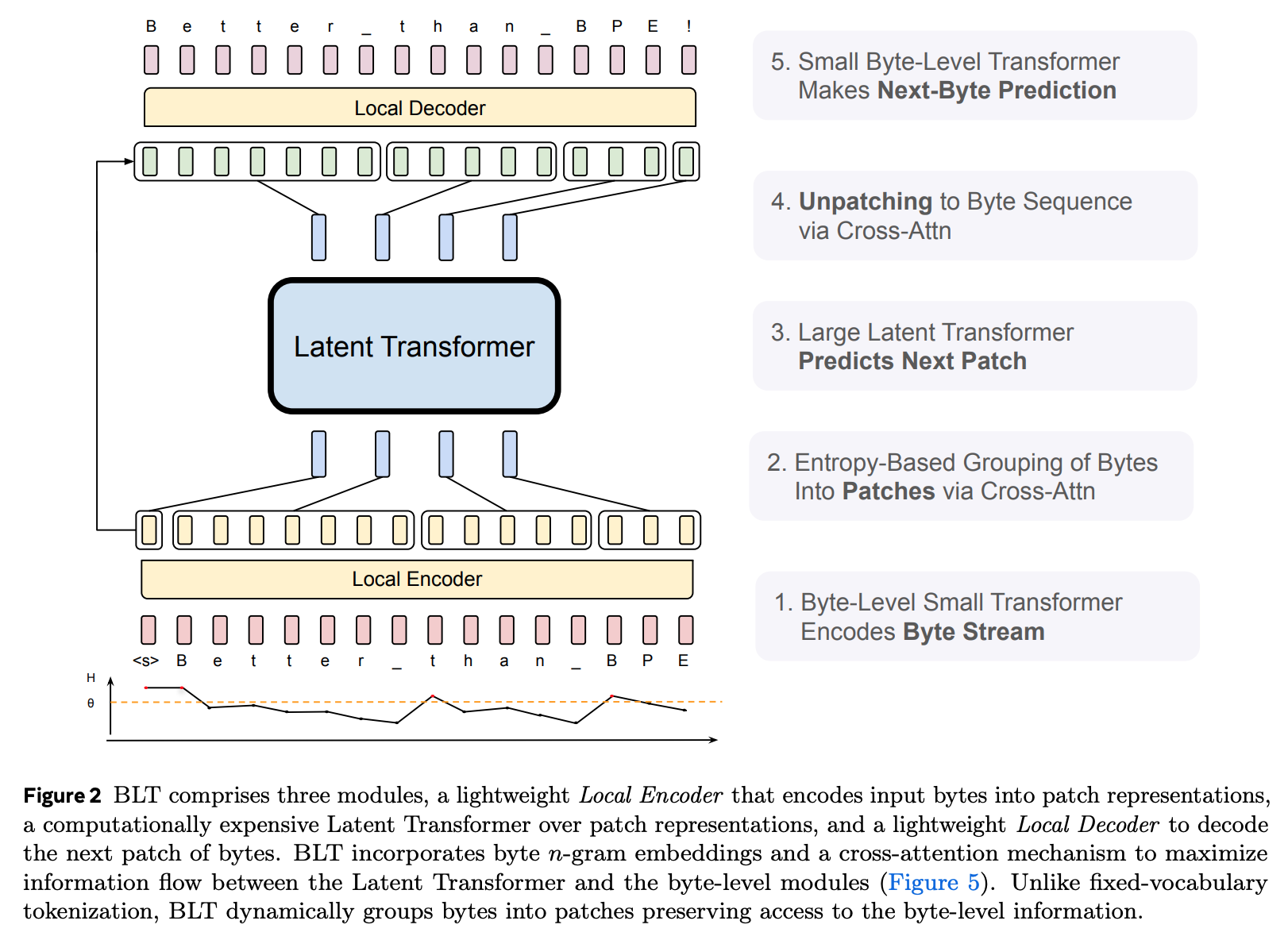

Tokenizer-free architecture that learns from raw byte data

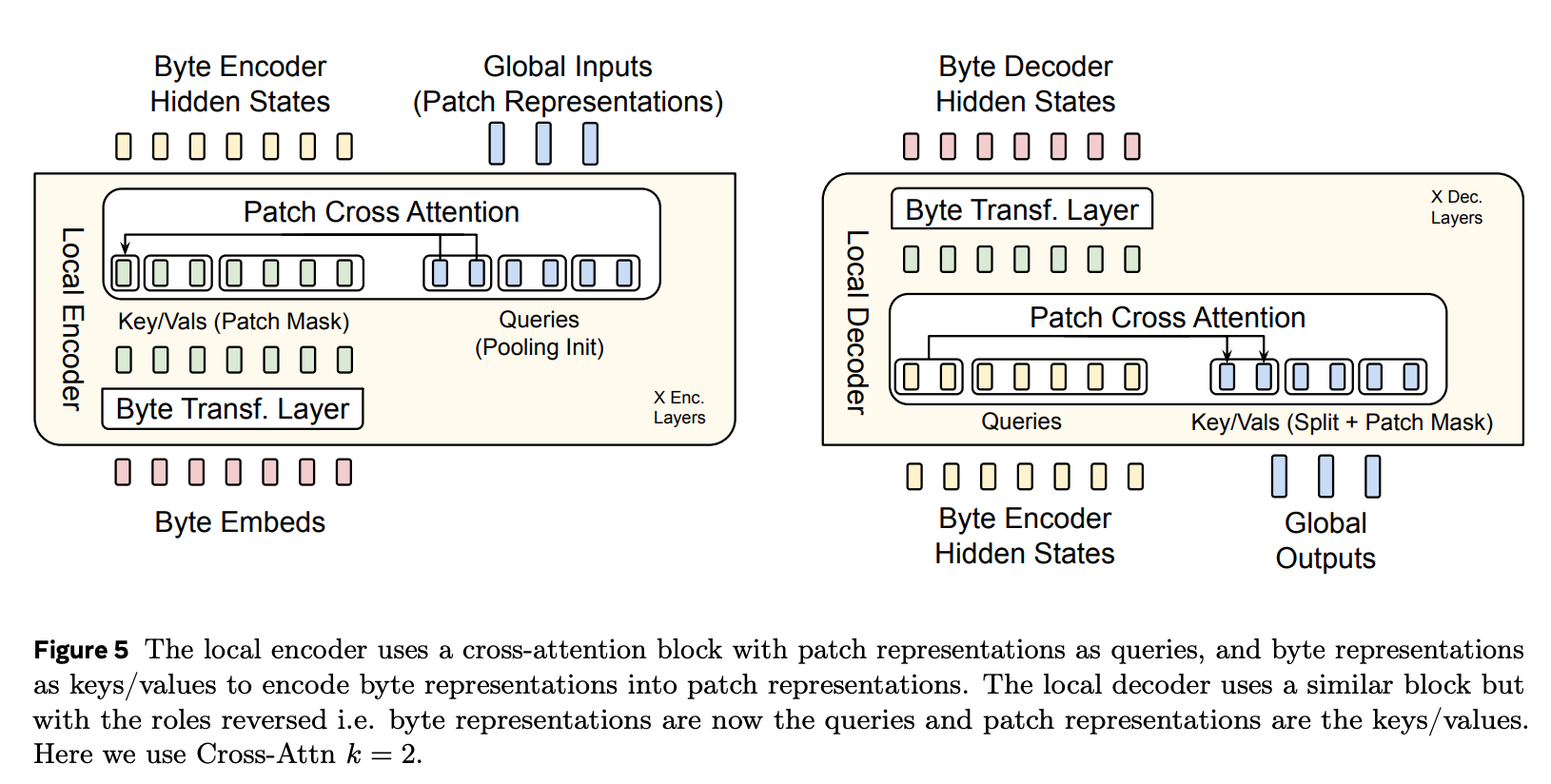

Encoder, Decoder

Entropy Patching: use entropy estimates to derive patch boundaries

- train a small byte-level auto-regressive language model on the training data

- uses a global threshold and a relative threshold

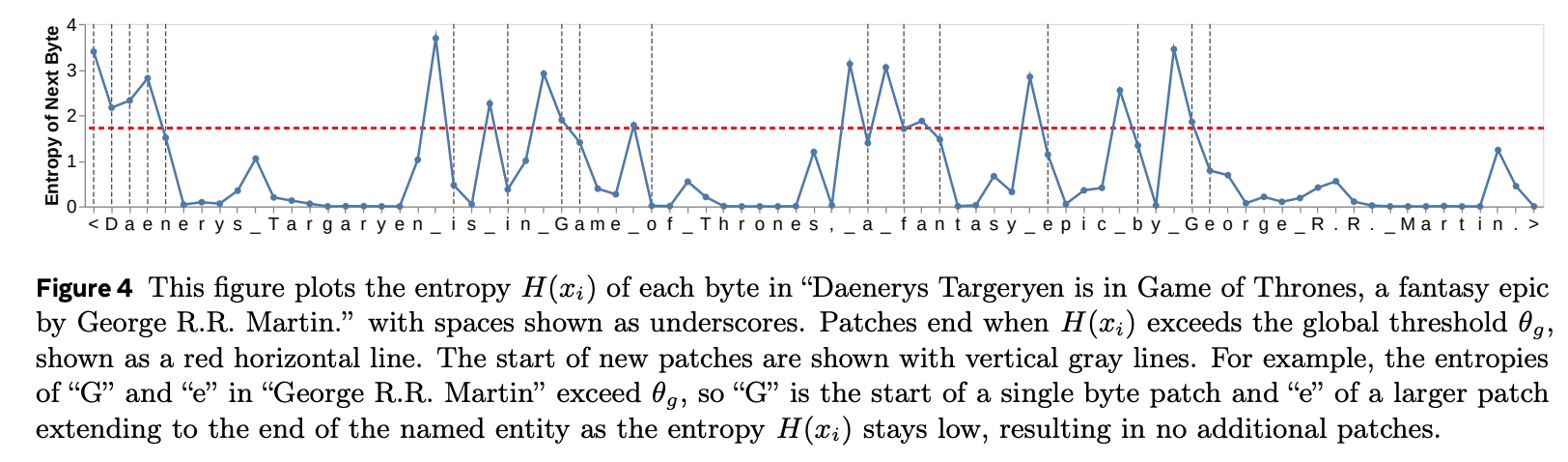

- example entropy plot

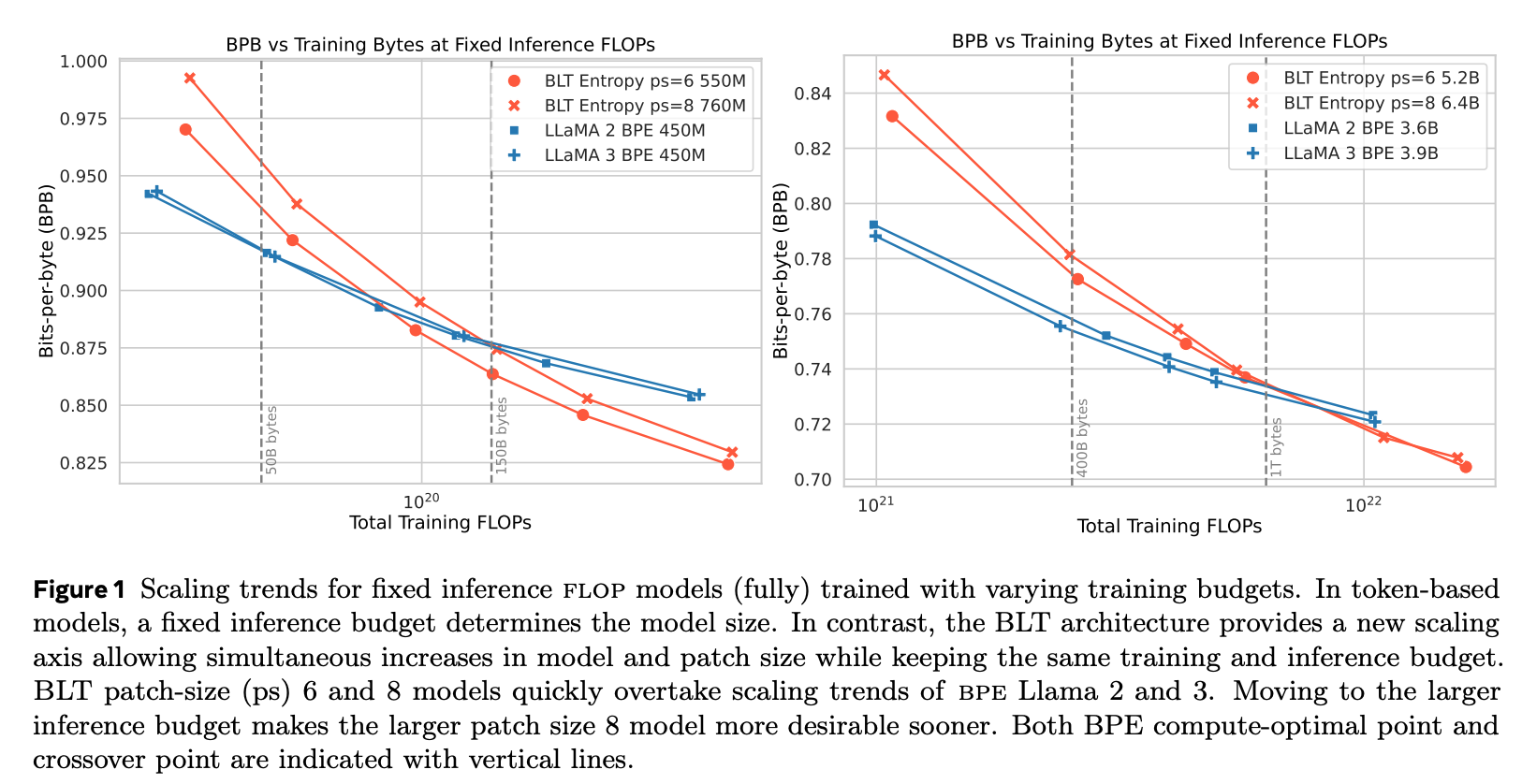

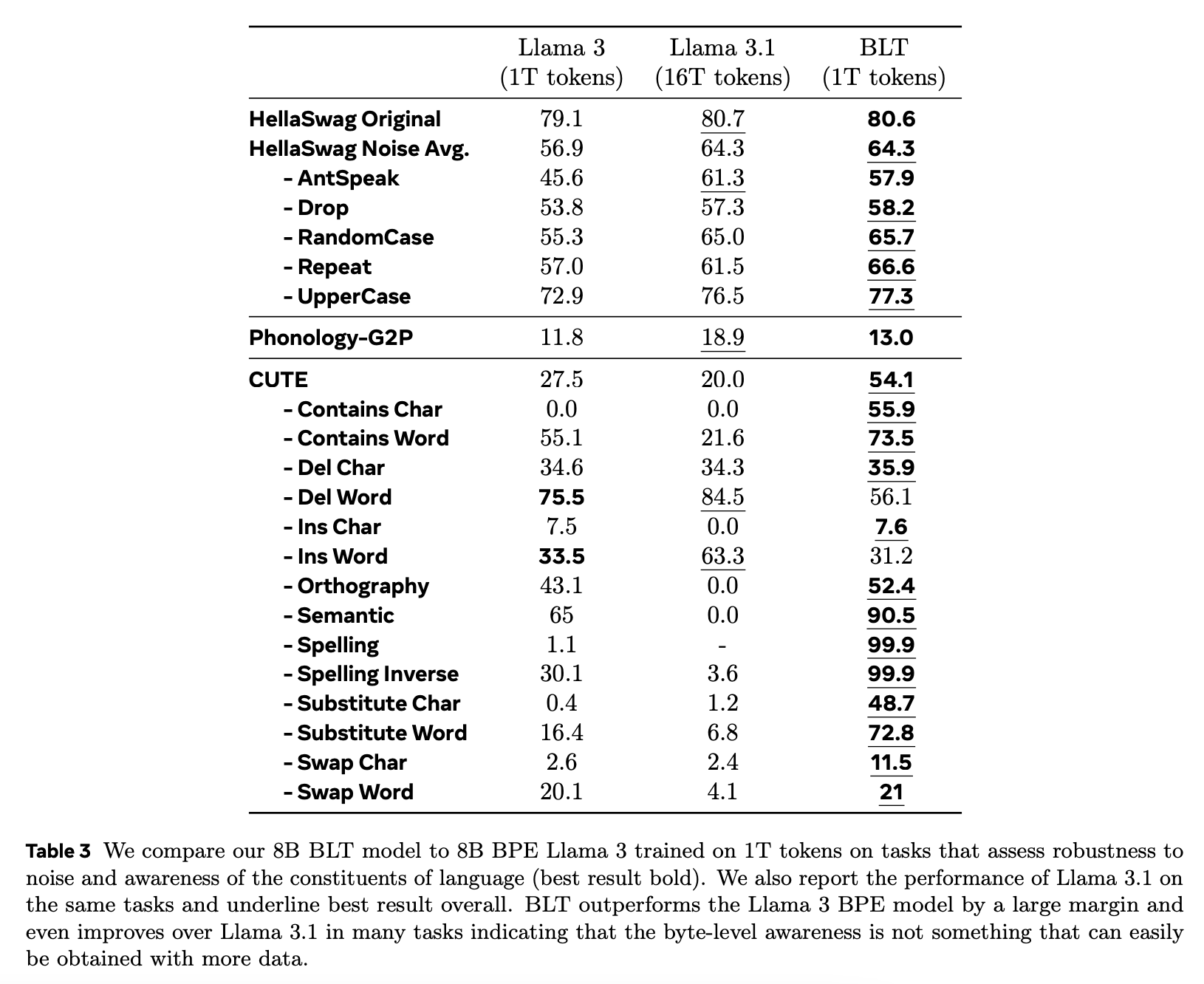
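The entropy patching step above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: it assumes the small byte-level model has already produced one next-byte entropy per position, that the global rule starts a new patch when the entropy exceeds a fixed threshold, and that the relative rule starts one when the entropy rises by more than a threshold compared with the previous byte. The function names (`entropy`, `patch_starts`, `split_patches`) and the threshold values are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a single next-byte distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def patch_starts(entropies, global_thr=None, relative_thr=None):
    """Return the byte indices at which a new patch starts.

    entropies[i] is assumed to be the entropy of the model's prediction
    for byte i given the preceding bytes. Index 0 always starts a patch.
    """
    starts = [0] if entropies else []
    for i in range(1, len(entropies)):
        # Assumed global rule: entropy above a fixed threshold.
        if global_thr is not None and entropies[i] > global_thr:
            starts.append(i)
        # Assumed relative rule: entropy jumps versus the previous byte.
        elif relative_thr is not None and entropies[i] - entropies[i - 1] > relative_thr:
            starts.append(i)
    return starts


def split_patches(data, starts):
    """Cut a bytes object into patches at the given start indices."""
    bounds = list(starts) + [len(data)]
    return [data[a:b] for a, b in zip(bounds, bounds[1:])]


# Made-up entropies for a 6-byte input; byte 3 is the hard-to-predict one.
example_entropies = [2.0, 0.5, 0.4, 1.8, 0.3, 0.2]
starts = patch_starts(example_entropies, global_thr=1.5)
patches = split_patches(b"abcdef", starts)
```

Under these assumptions, predictable stretches of bytes are grouped into long patches, while a spike in entropy opens a new patch, so more compute is spent where the next byte is hard to predict.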

Experiments

- Achieves its performance with fewer parameters and smaller dimensions.