(Paper Summary) LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale (Paper)

Key Points

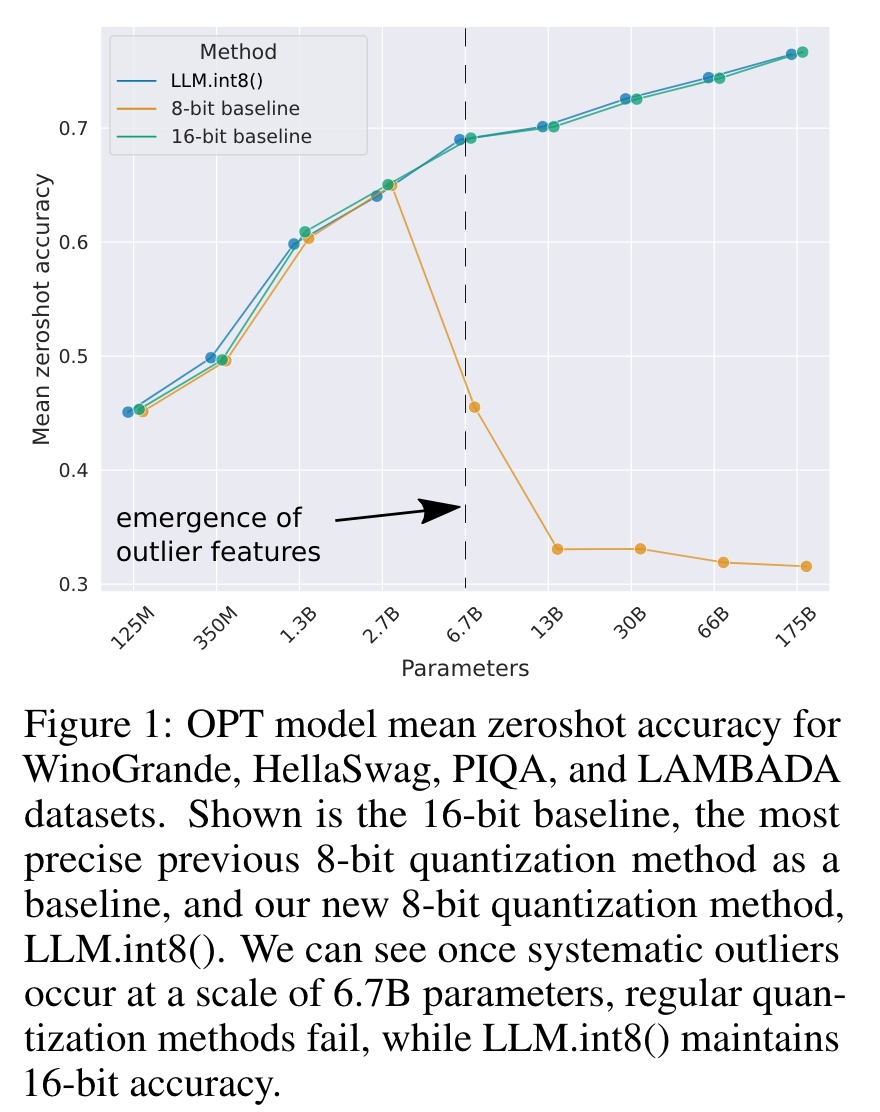

motivation: naive 8-bit quantization degrades model performance because of outlier feature dimensions.
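To see why a single outlier hurts, here is a minimal NumPy sketch of tensor-wise absmax int8 quantization (an illustration, not the paper's experiment). The value 60.0 is a made-up outlier: because one scale is shared by the whole tensor, the outlier stretches the quantization step and the ordinary values lose most of their precision.

```python
import numpy as np

def absmax_roundtrip(x: np.ndarray) -> np.ndarray:
    """Tensor-wise absmax int8 round trip: scale into [-127, 127], round, scale back."""
    scale = 127.0 / np.max(np.abs(x))
    q = np.round(x * scale).astype(np.int8)
    return q.astype(np.float32) / scale

rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=1024).astype(np.float32)

# Hypothetical outlier: one element roughly 20x larger than typical values here.
x_outlier = x.copy()
x_outlier[0] = 60.0

err_clean = np.mean(np.abs(absmax_roundtrip(x) - x))
# Measure the error on the ordinary elements only, excluding the outlier itself.
err_outlier = np.mean(np.abs(absmax_roundtrip(x_outlier)[1:] - x_outlier[1:]))
print(f"mean abs error, no outlier:  {err_clean:.4f}")
print(f"mean abs error, one outlier: {err_outlier:.4f}")
```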

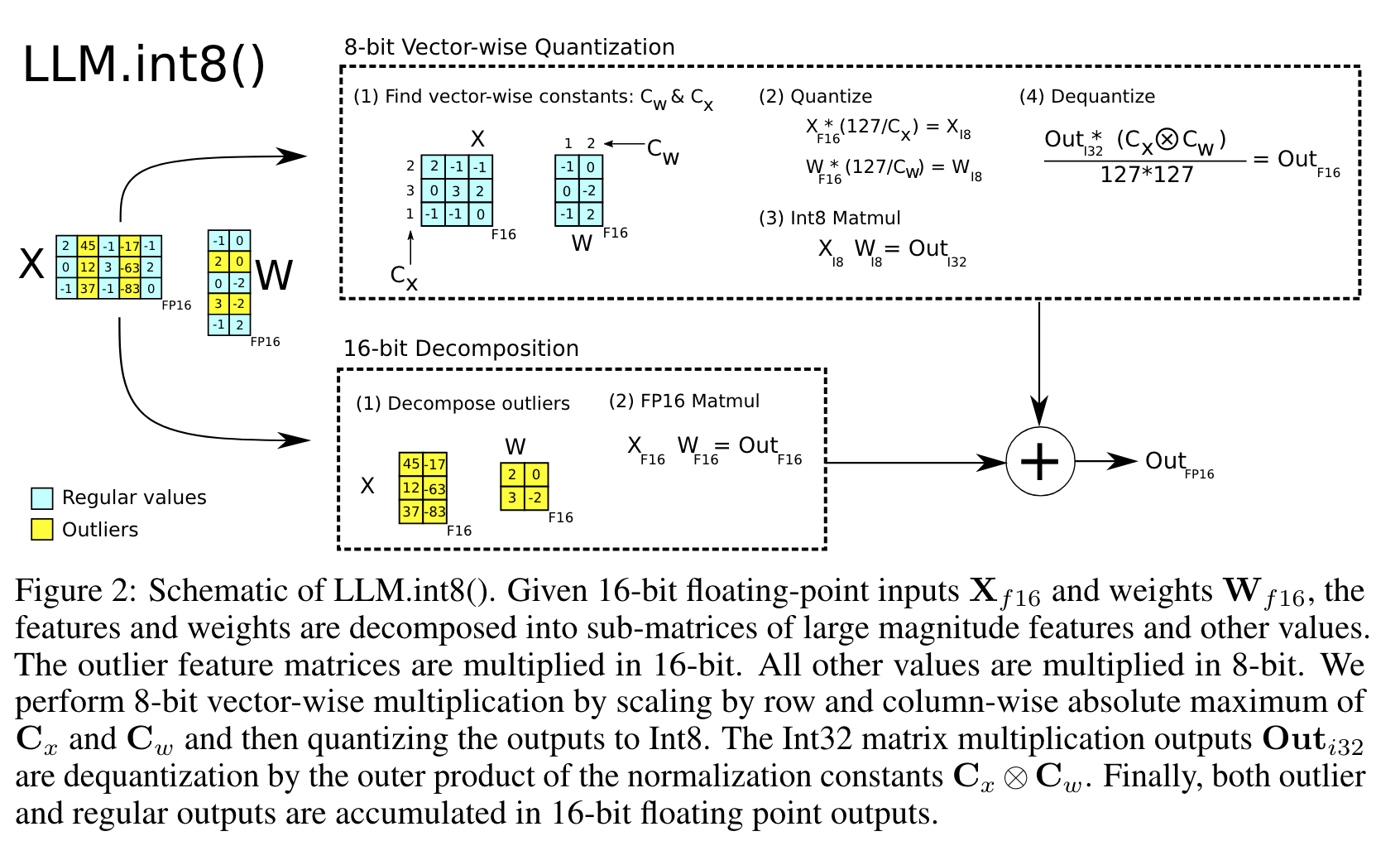

- method: split off the outlier feature dimensions (magnitudes up to 20x larger than the rest).

- 16-bit matrix multiplication for the outlier feature dimensions

- 8-bit matrix multiplication for the other 99.9% of the dimensions

- outlier feature dimensions: every dimension that contains at least one element whose magnitude is larger than the threshold 6.0
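A minimal NumPy sketch of the decomposition described above, assuming the 16-bit path can be emulated with `np.float16` and that the 8-bit path uses per-row/per-column absmax scaling (the vectorwise quantization summarized below). The function names `int8_vectorwise_matmul` and `mixed_precision_matmul` are hypothetical, not the bitsandbytes API, and nothing here reproduces the paper's CUDA kernels.

```python
import numpy as np

def int8_vectorwise_matmul(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Absmax-quantize rows of x and columns of w to int8, multiply in int32, dequantize."""
    # One scale per row of x and one per column of w (guarded against all-zero vectors).
    cx = 127.0 / np.maximum(np.abs(x).max(axis=1, keepdims=True), 1e-8)  # (s, 1)
    cw = 127.0 / np.maximum(np.abs(w).max(axis=0, keepdims=True), 1e-8)  # (1, o)
    xq = np.round(x * cx).astype(np.int8)
    wq = np.round(w * cw).astype(np.int8)
    acc = xq.astype(np.int32) @ wq.astype(np.int32)  # exact integer accumulation
    return acc / (cx * cw)  # (s, 1) * (1, o) broadcasts to the outer product of scales

def mixed_precision_matmul(x: np.ndarray, w: np.ndarray, threshold: float = 6.0) -> np.ndarray:
    # A hidden dimension (column of x / row of w) is an outlier if any entry of x
    # in that column has magnitude larger than the threshold.
    outlier = np.any(np.abs(x) > threshold, axis=0)
    # 16-bit path for the few outlier dimensions (np.float16 as a stand-in for fp16 GEMM).
    out16 = x[:, outlier].astype(np.float16) @ w[outlier, :].astype(np.float16)
    # 8-bit path for all remaining dimensions.
    out8 = int8_vectorwise_matmul(x[:, ~outlier], w[~outlier, :])
    return out16.astype(np.float64) + out8

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 64)).astype(np.float32)
x[:, 3] += 40.0  # inject a hypothetical outlier feature dimension
w = (0.1 * rng.normal(size=(64, 8))).astype(np.float32)

ref = x @ w
mixed = mixed_precision_matmul(x, w)
plain = int8_vectorwise_matmul(x, w)  # int8 for every dimension, outlier included
print("max error, mixed precision:", np.abs(mixed - ref).max())
print("max error, int8 only:      ", np.abs(plain - ref).max())
```

Keeping the outlier column out of the int8 path lets each row scale be set by the ordinary values instead of the outlier, which is what makes the 8-bit part accurate.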

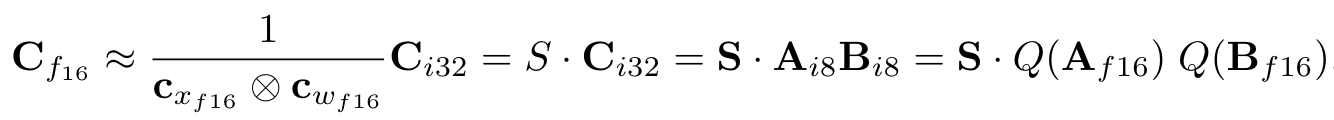

- vectorwise quantization:

$X_{f16}W_{f16}\in\mathbb{R}^{s\times o}, c_{X_{f16}}\in\mathbb{R}^s, c_{W_{f16}}\in\mathbb{R}^o$

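The shapes above mean one scale per row of $X_{f16}$ and one per column of $W_{f16}$; the int32 product is then rescaled by the outer product of the two scale vectors. A minimal NumPy sketch, assuming absmax scaling (`127 / max|row|` and `127 / max|column|`) and made-up data whose rows differ in magnitude by orders of magnitude; the function names are illustrative only. A tensor-wise baseline is included for comparison.

```python
import numpy as np

def quantize_vectorwise(x: np.ndarray, w: np.ndarray):
    """Absmax-quantize each row of X and each column of W with its own scale."""
    c_x = 127.0 / np.abs(x).max(axis=1)  # shape (s,): one scale per row of X
    c_w = 127.0 / np.abs(w).max(axis=0)  # shape (o,): one scale per column of W
    x_i8 = np.round(x * c_x[:, None]).astype(np.int8)
    w_i8 = np.round(w * c_w[None, :]).astype(np.int8)
    return x_i8, w_i8, c_x, c_w

def quantize_tensorwise(x: np.ndarray, w: np.ndarray):
    """Baseline: a single absmax scale for all of X and a single one for all of W."""
    c_x = np.full(x.shape[0], 127.0 / np.abs(x).max())
    c_w = np.full(w.shape[1], 127.0 / np.abs(w).max())
    x_i8 = np.round(x * c_x[:, None]).astype(np.int8)
    w_i8 = np.round(w * c_w[None, :]).astype(np.int8)
    return x_i8, w_i8, c_x, c_w

def dequantize_product(x_i8, w_i8, c_x, c_w):
    """Int32 matmul, then divide entry (i, j) by c_x[i] * c_w[j]."""
    c_i32 = x_i8.astype(np.int32) @ w_i8.astype(np.int32)
    return c_i32 / np.outer(c_x, c_w)

def row_rel_error(approx, ref):
    return np.linalg.norm(approx - ref, axis=1) / np.linalg.norm(ref, axis=1)

rng = np.random.default_rng(1)
s, h, o = 3, 16, 5
# Hypothetical rows of X at very different magnitudes.
x = rng.normal(size=(s, h)) * np.array([[0.01], [1.0], [100.0]])
w = rng.normal(size=(h, o))
ref = x @ w

vec = dequantize_product(*quantize_vectorwise(x, w))
ten = dequantize_product(*quantize_tensorwise(x, w))
print("vectorwise per-row relative error:", row_rel_error(vec, ref))
print("tensorwise per-row relative error:", row_rel_error(ten, ref))
```

With a single tensor-wise scale, the small-magnitude row rounds entirely to zero; per-row scales keep every row accurate.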

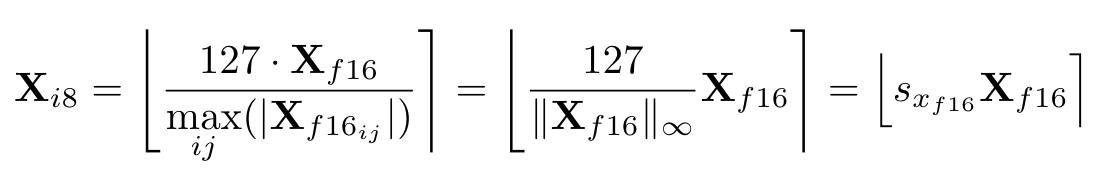

- 8-bit quantization methods (target range [−127, 127]):

- Absmax:

- Zeropoint:

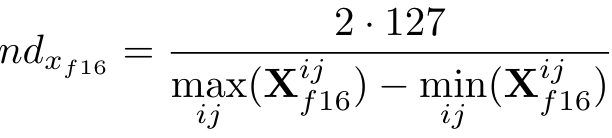

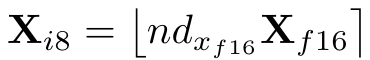

- Absmax:
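Both schemes can be sketched in a few lines of NumPy. The zeropoint variant below uses a common asymmetric formulation (scale $254/(\max - \min)$ plus an integer zero point chosen so the minimum maps to −127); this is an assumption for illustration, and the paper's exact rounding and offset conventions may differ. The data is hypothetical one-sided input, such as ReLU outputs.

```python
import numpy as np

def absmax_quantize(x: np.ndarray):
    """Symmetric: scale by 127 / max|x|, so 0 maps to 0 and codes lie in [-127, 127]."""
    scale = 127.0 / np.max(np.abs(x))
    q = np.round(x * scale).astype(np.int8)
    return q, scale

def absmax_dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float64) / scale

def zeropoint_quantize(x: np.ndarray):
    """Asymmetric: stretch [min, max] over all 255 codes, shifted by an integer zero point."""
    x_min, x_max = float(np.min(x)), float(np.max(x))
    scale = 254.0 / (x_max - x_min)
    zp = -127 - int(np.round(x_min * scale))  # chosen so that x_min maps to -127
    q = np.clip(np.round(x * scale + zp), -127, 127).astype(np.int8)
    return q, scale, zp

def zeropoint_dequantize(q: np.ndarray, scale: float, zp: int) -> np.ndarray:
    return (q.astype(np.float64) - zp) / scale

# Hypothetical one-sided data, e.g. activations after a ReLU.
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=1000)

q_abs, s_abs = absmax_quantize(x)
q_zp, s_zp, zp = zeropoint_quantize(x)

err_abs = np.mean(np.abs(absmax_dequantize(q_abs, s_abs) - x))
err_zp = np.mean(np.abs(zeropoint_dequantize(q_zp, s_zp, zp) - x))
print("absmax    codes:", q_abs.min(), "to", q_abs.max(), "mean abs error:", err_abs)
print("zeropoint codes:", q_zp.min(), "to", q_zp.max(), "mean abs error:", err_zp)
```

On one-sided data, absmax leaves all negative codes unused, while zeropoint spreads the values over the full [−127, 127] range and roughly halves the rounding error. The cost is the extra zero-point term that has to be handled when the quantized values enter a matrix multiplication.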

Experimental Results

- The multiplication by 0.5 appears to be a heuristic.