(Paper Summary) BitNet

- BitNet: Scaling 1-bit Transformers for Large Language Models
- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits
- BitNet b1.58 2B4T Technical Report (repo)

Key Ideas

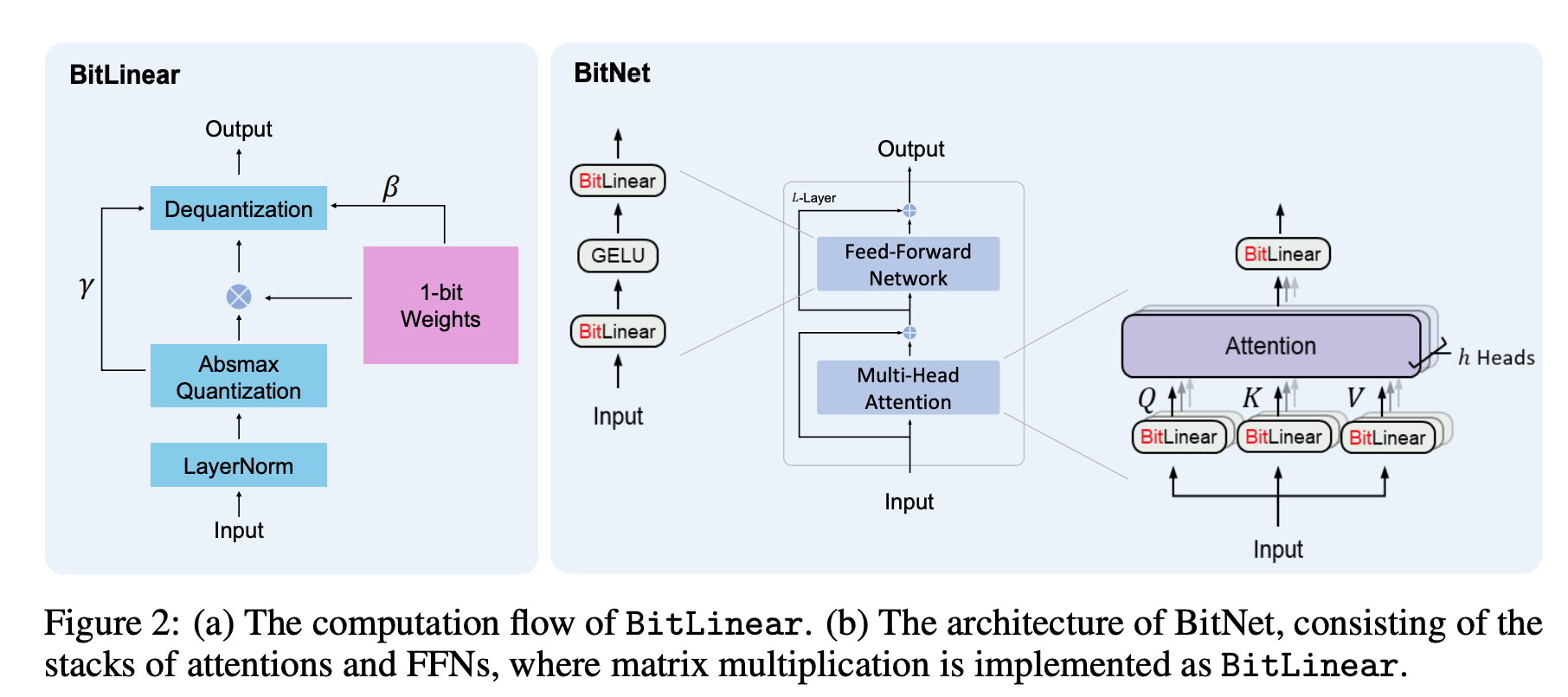

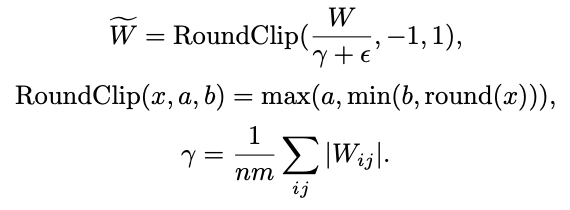

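- Restricting the weight matrix to {-1, 0, 1} speeds up matrix multiplication, since each product reduces to an addition, a subtraction, or a skip.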

- Weight quantization formula

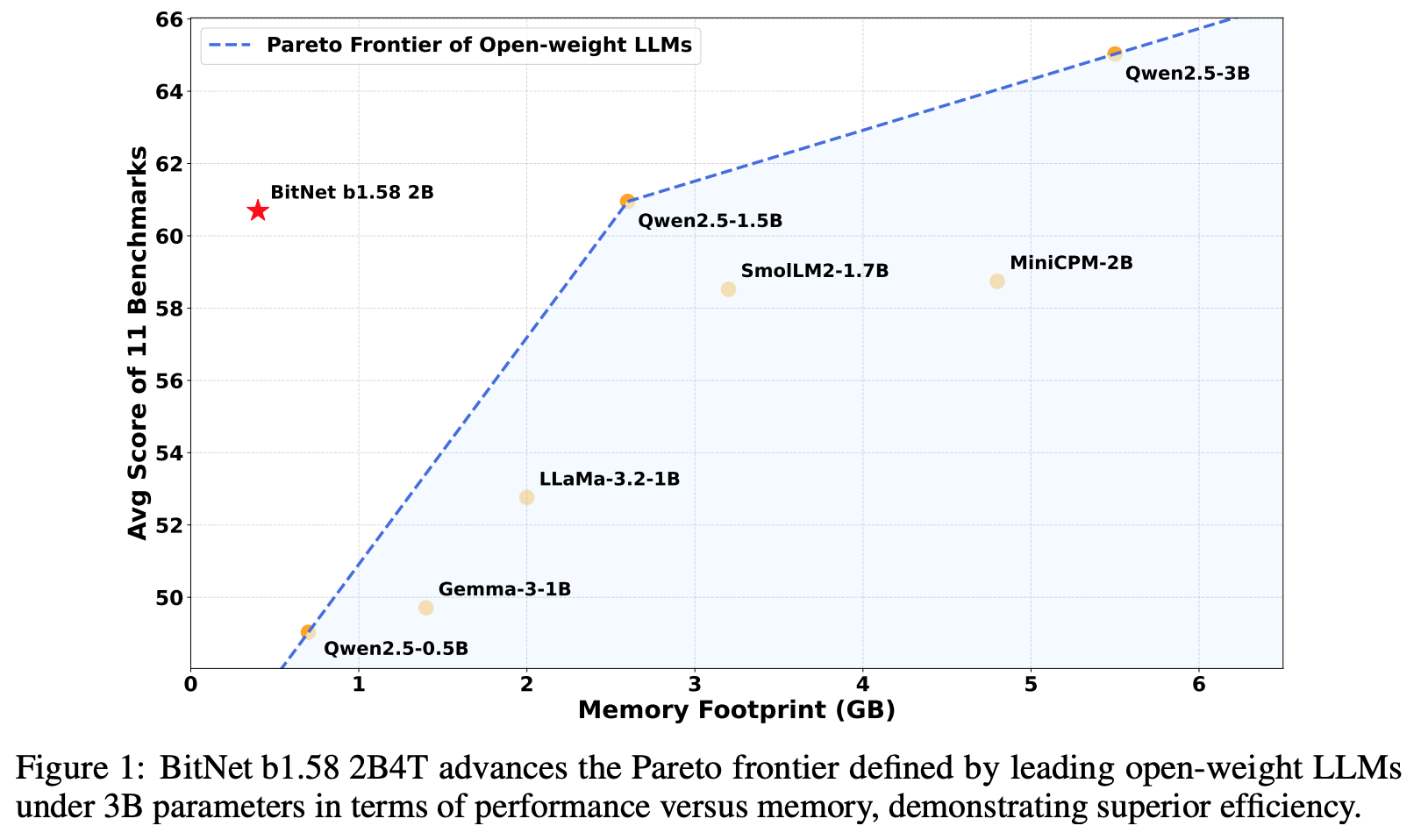

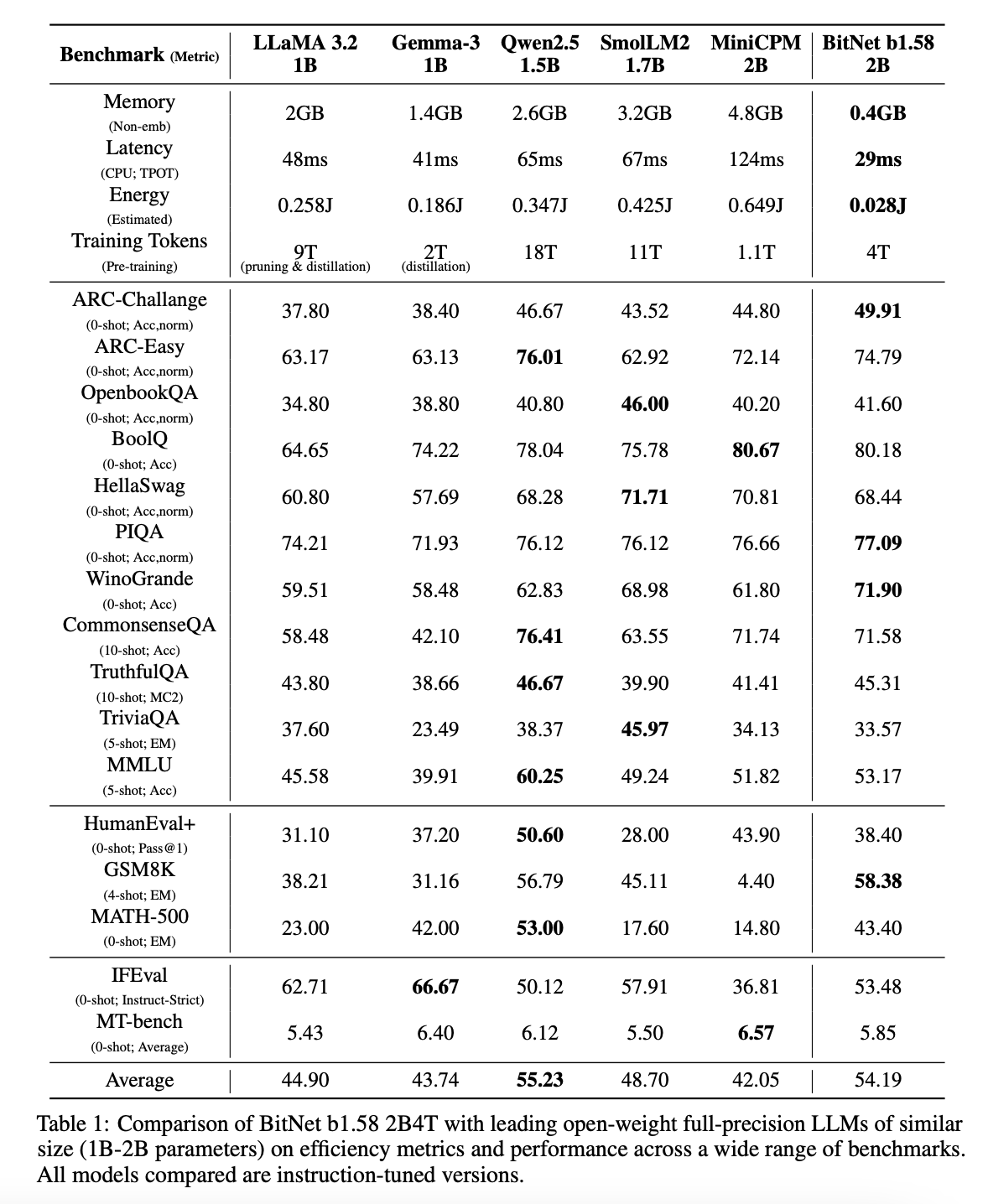

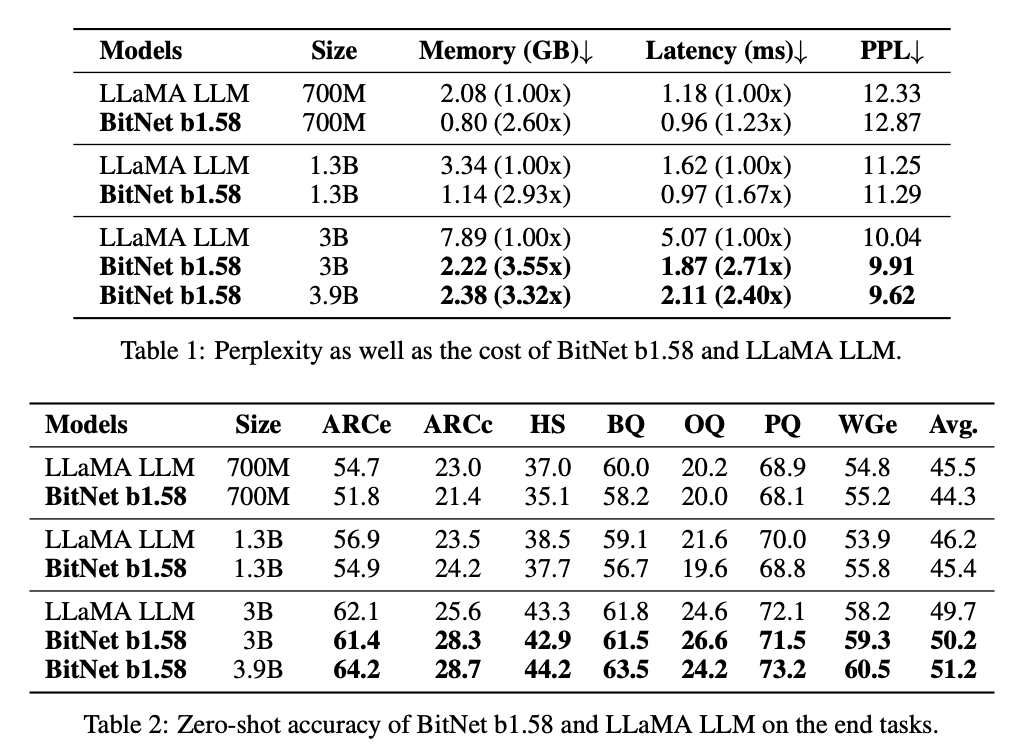
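As a rough illustration of the weight quantization above, here is a minimal sketch that assumes the absmean scheme described in the BitNet b1.58 paper: scale the weights by their mean absolute value, round, and clip to [-1, 1]. The function names `absmean_quantize` and `ternary_matvec` and the `eps` default are hypothetical, chosen for this example only. It is not the reference implementation.

```python
def absmean_quantize(weights, eps=1e-5):
    """Quantize a 2-D list of floats to ternary values {-1, 0, 1}.

    Assumed formula: gamma = mean(|W|), W_q = clip(round(W / (gamma + eps)), -1, 1).
    Note: Python's round() rounds exact .5 ties to the nearest even integer.
    """
    n = sum(len(row) for row in weights)
    gamma = sum(abs(w) for row in weights for w in row) / n
    ternary = [
        [max(-1, min(1, round(w / (gamma + eps)))) for w in row]
        for row in weights
    ]
    return ternary, gamma


def ternary_matvec(ternary, x):
    """Multiply a ternary matrix by a vector using only additions and subtractions."""
    out = []
    for row in ternary:
        acc = 0.0
        for t, xi in zip(row, x):
            if t == 1:
                acc += xi      # weight +1: add the input
            elif t == -1:
                acc -= xi      # weight -1: subtract the input
            # weight 0: skip the input entirely
        out.append(acc)
    return out


W = [[0.9, -0.05, -1.2], [0.1, 0.6, -0.7]]
W_t, gamma = absmean_quantize(W)
# Rescale by gamma to approximate the full-precision product W @ x.
y = [gamma * v for v in ternary_matvec(W_t, [1.0, 2.0, 3.0])]
```

The inner loop never multiplies by a weight, which is where the speedup comes from. The single scalar `gamma` is applied once per output to restore the original magnitude.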

Experimental Results

BitNet 2B model

- Trained with BitLinear layers throughout pre-training, SFT, and DPO.
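To give a feel for what a BitLinear layer computes at inference time, here is a simplified forward-pass sketch. It assumes per-token absmax quantization of activations to 8 bits and already-ternarized weights with a single scale `gamma`, as described in the BitNet b1.58 papers. The names `quantize_activations` and `bitlinear_forward` are hypothetical. The sketch leaves out normalization and the training-time gradient handling, so it should not be read as the actual implementation.

```python
def quantize_activations(x, bits=8):
    """Absmax-quantize one token's activations to signed integers (assumed scheme)."""
    qmax = 2 ** (bits - 1) - 1                 # 127 for 8 bits
    scale = max(abs(v) for v in x) or 1.0      # avoid division by zero for all-zero input
    q = [max(-qmax - 1, min(qmax, round(v * qmax / scale))) for v in x]
    return q, scale / qmax                     # integers and the step size to undo scaling


def bitlinear_forward(x, ternary_w, gamma):
    """Approximate W @ x with integer activations and ternary weights.

    ternary_w: rows of {-1, 0, 1}; gamma: weight scale from quantization.
    """
    q, x_step = quantize_activations(x)
    out = []
    for row in ternary_w:
        acc = sum(t * qi for t, qi in zip(row, q))   # integer accumulation
        out.append(acc * gamma * x_step)             # dequantize once per output
    return out


y = bitlinear_forward([1.0, -2.0, 0.5], [[1, 0, -1], [0, 1, -1]], gamma=0.5)
```

With these inputs the full-precision result would be `[0.25, -1.25]`. The sketch returns values close to that, with the small difference coming from 8-bit rounding of the activations.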