(Paper summary) Can LLMs Design Good Questions Based on Context? (paper)

Key points

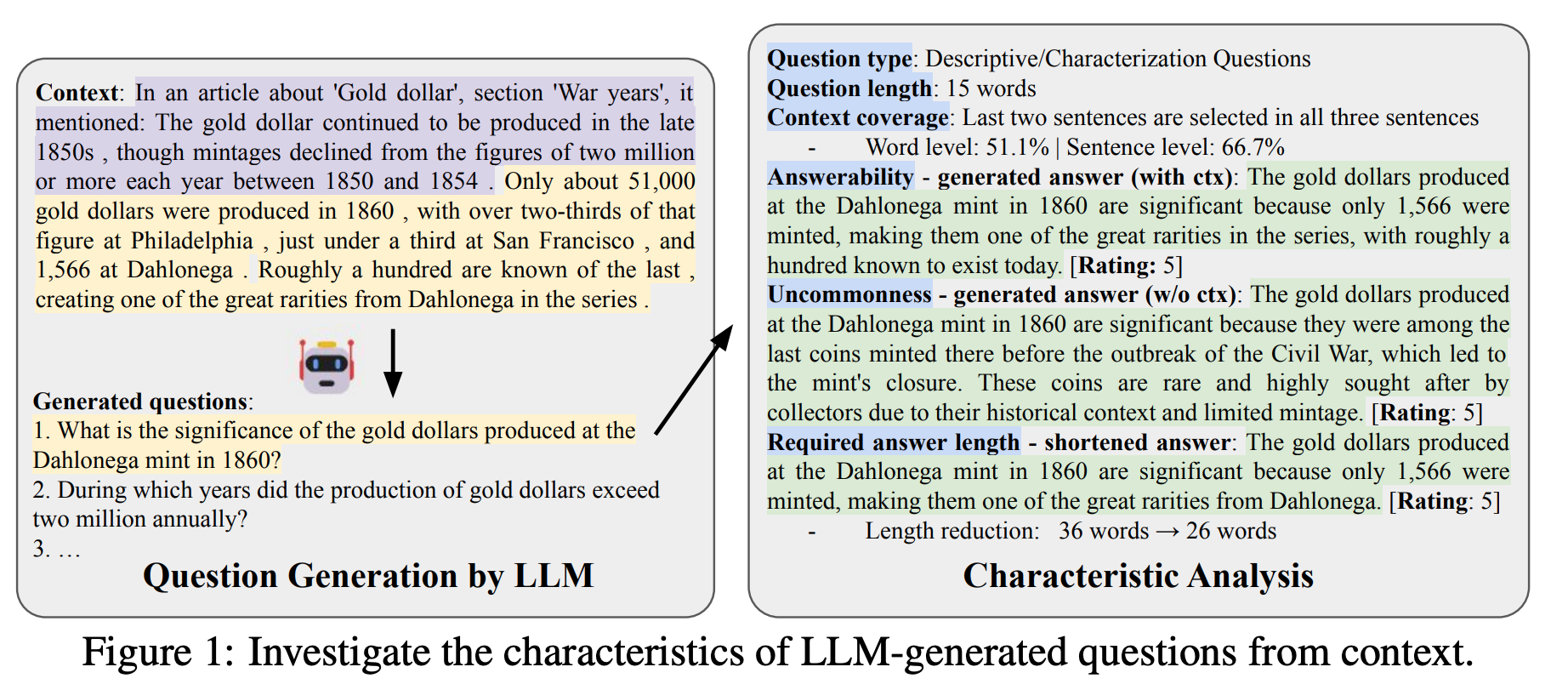

- Analyzes how LLM-generated questions differ, along six dimensions.

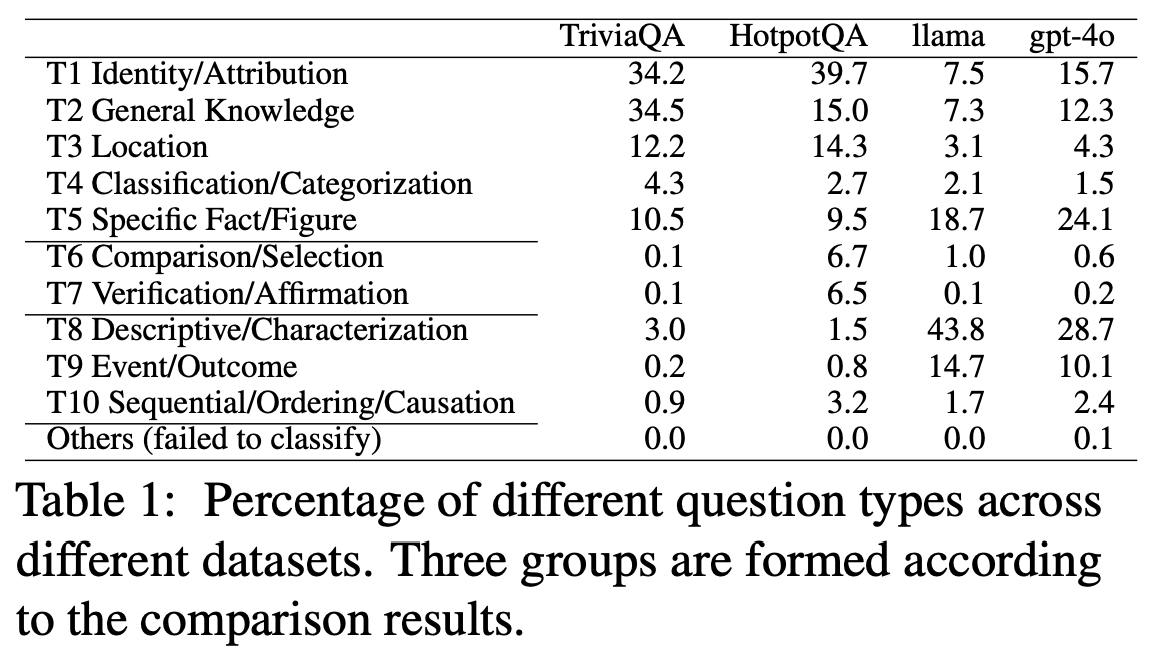

- Question type

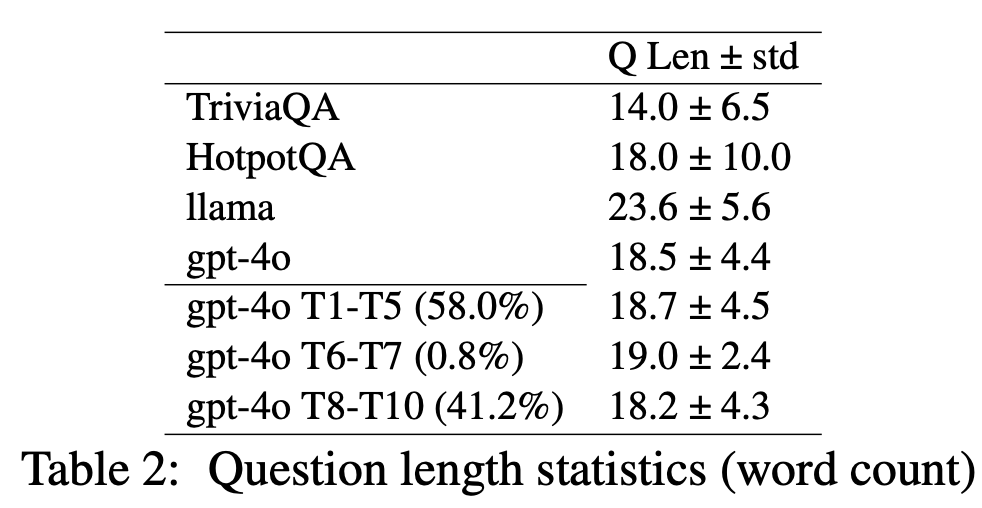

- Question length

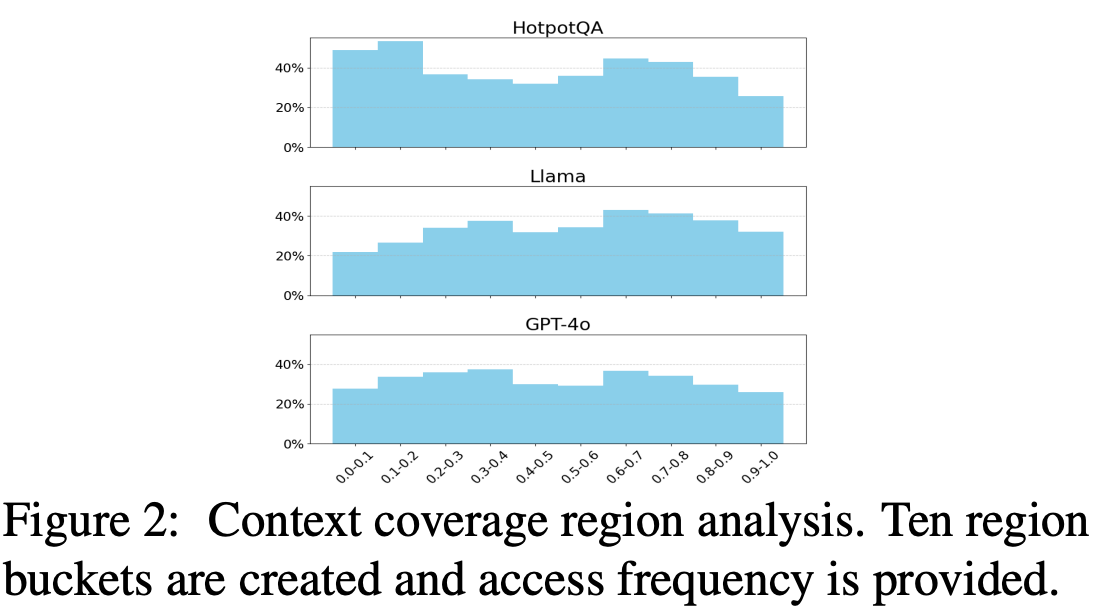

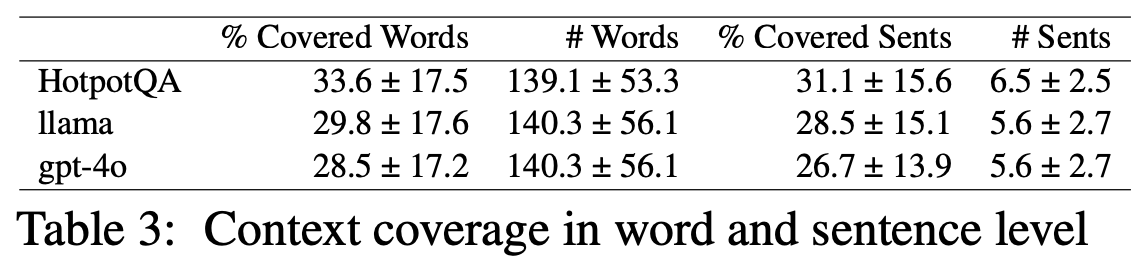

- Context coverage

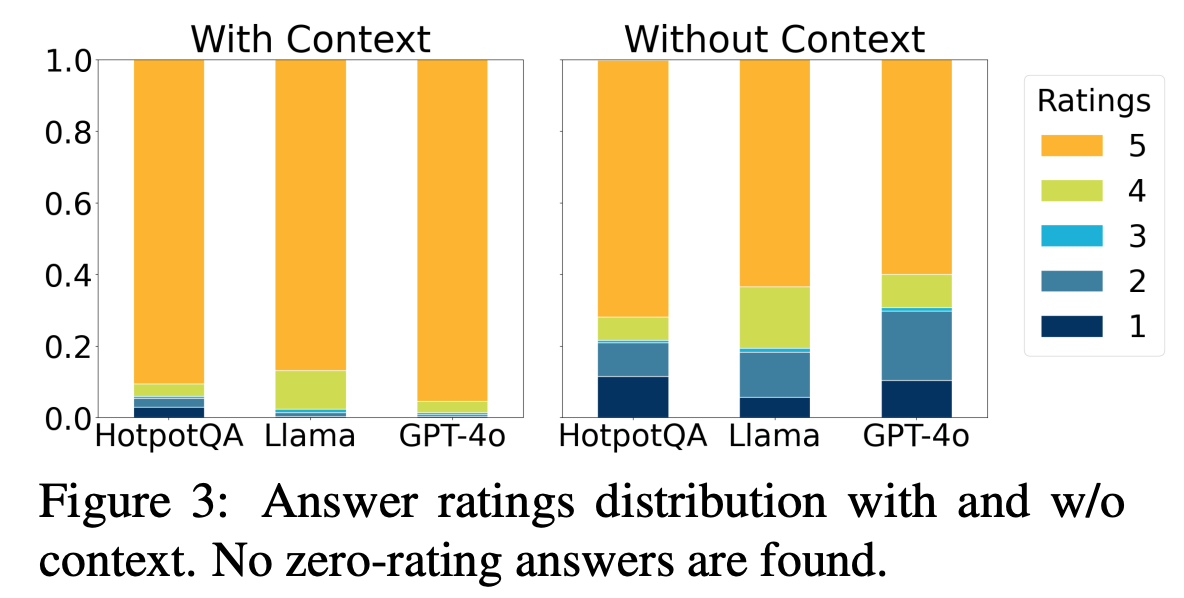

- Answerability

- Uncommonness

- Required answer length

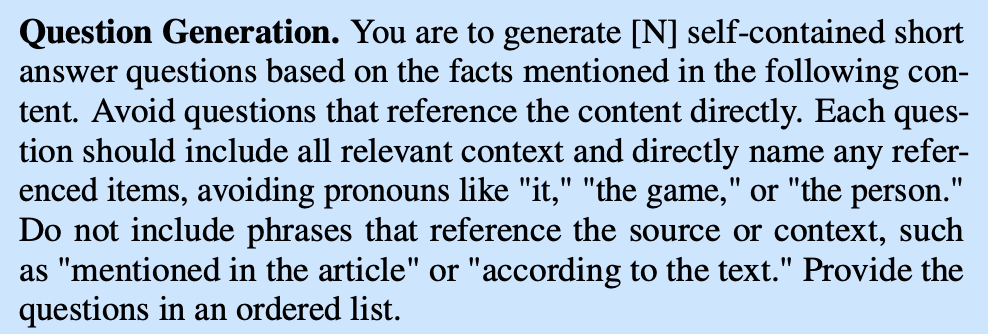

- Prompt
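To make two of the dimensions above more concrete, here is a minimal sketch of how question length and context coverage could be approximated. It assumes simple word-level proxies: length is a word count, and coverage is the fraction of context sentences that share at least one non-stopword with the question. The function names, the stopword list, and the naive sentence splitter are all illustrative assumptions, not the paper's actual metrics.

```python
import re

# Tiny illustrative stopword list (an assumption, not taken from the paper).
STOPWORDS = frozenset(
    {"the", "a", "an", "of", "in", "is", "was", "what", "which", "who", "did", "to"}
)


def tokenize(text):
    """Lowercase alphanumeric tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def question_length(question):
    """Question length measured in words."""
    return len(tokenize(question))


def split_sentences(context):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", context.strip()) if s]


def context_coverage(question, context):
    """Fraction of context sentences sharing a non-stopword with the question."""
    q_words = set(tokenize(question)) - STOPWORDS
    sentences = split_sentences(context)
    if not sentences:
        return 0.0
    hits = sum(1 for s in sentences if q_words & set(tokenize(s)))
    return hits / len(sentences)


if __name__ == "__main__":
    ctx = (
        "Marie Curie was born in Warsaw. "
        "She won the Nobel Prize in 1903. "
        "Paris became her home."
    )
    q = "Which prize did Marie Curie win in 1903?"
    print(question_length(q), context_coverage(q, ctx))
```

Word overlap is only a rough proxy; an embedding-based or LLM-based relevance judgment would likely track the notion of coverage more closely.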

Experimental results

a strong preference for asking about specific facts and figures in both LLaMA and GPT models

LLMs tend to exhibit distinct preferences for length

LLM-based question generation displays an almost opposite positional focus compared to QA

No large difference compared with HotpotQA; the generated questions are easy to answer when the context is given and hard to answer without it.
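The with-context versus without-context comparison can be sketched as follows. This is a hedged illustration only: `answer_fn` (something that answers a question, optionally given a context) and `score_fn` (something that rates an answer against a reference) are hypothetical callables supplied by the caller, and the record keys are assumed names. The paper's actual answering and rating setup may differ.

```python
from statistics import mean


def answerability_gap(items, answer_fn, score_fn):
    """Compare answer quality with and without the context.

    items: list of dicts with "question", "context" and "reference" keys
           (assumed record layout).
    answer_fn(question, context_or_None) -> answer string (hypothetical).
    score_fn(answer, reference) -> numeric score (hypothetical).
    Returns (mean score with context, mean score without context, gap).
    """
    with_ctx, without_ctx = [], []
    for item in items:
        q, ctx, ref = item["question"], item["context"], item["reference"]
        with_ctx.append(score_fn(answer_fn(q, ctx), ref))
        without_ctx.append(score_fn(answer_fn(q, None), ref))
    m_with, m_without = mean(with_ctx), mean(without_ctx)
    return m_with, m_without, m_with - m_without
```

A large positive gap would correspond to the pattern described above: questions that are answerable from the context but hard to answer from background knowledge alone.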

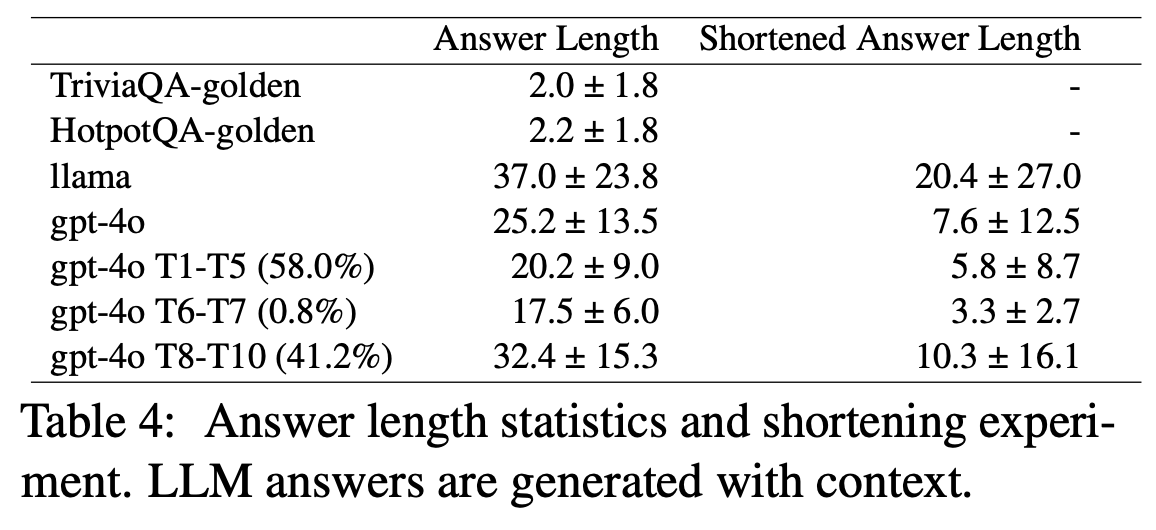

LLM-generated questions still require significantly longer answers